- A single-channel noise reduction filtering/smoothing technique in the time domain

41 Views

- MONAURAL SINGING VOICE SEPARATION WITH SKIP-FILTERING CONNECTIONS AND RECURRENT INFERENCE OF TIME-FREQUENCY MASK

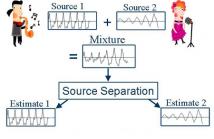

Singing voice separation based on deep learning relies on time-frequency masking. In many cases, the masking process is not a learnable function or is not included in the deep learning optimization. Consequently, most existing methods rely on a post-processing step based on generalized Wiener filtering. This work proposes a method that learns and optimizes a source-dependent mask during training, removing the need for this post-processing step.
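As a point of reference, the conventional generalized Wiener filtering post-processing that this work aims to avoid can be sketched as follows. This is not the proposed skip-filtering method; it assumes the network outputs one non-negative magnitude spectrogram estimate per source, and the function names and the `alpha` exponent parameterization are illustrative assumptions.

```python
import numpy as np

def generalized_wiener_masks(mags, alpha=2.0, eps=1e-12):
    """Compute per-source masks from estimated source magnitude spectrograms.

    mags:  non-negative array of shape (n_sources, n_freq, n_frames).
    alpha: exponent applied to the magnitudes; alpha=2 gives the classic
           power-spectrogram Wiener mask, alpha=1 a magnitude-ratio mask.
    """
    powered = np.power(mags, alpha)
    # Normalize so that the masks of all sources sum to (almost) one per bin.
    return powered / (powered.sum(axis=0, keepdims=True) + eps)

def apply_masks(mixture_stft, masks):
    """Multiply the complex mixture STFT by each mask (the mixture phase is reused)."""
    return masks * mixture_stft[np.newaxis, ...]
```

Because the masks sum to one in every time-frequency bin, the masked source estimates add back up to the mixture STFT.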

5 Views

- MULTI-SCENARIO DEEP LEARNING FOR MULTI-SPEAKER SOURCE SEPARATION

Research in deep learning for multi-speaker source separation has received a boost in recent years. However, most studies are restricted to mixtures of a specific number of speakers, referred to as a specific scenario. While some works included experiments for different scenarios, research on combining data from different scenarios or on creating a single model for multiple scenarios has been very rare. This work shows that data from a specific scenario is relevant for solving another scenario.

10 Views

Live concert recordings consist of long multitrack audio samples with significant interference between channels. For audio engineering purposes, it is desirable to attenuate this interference. We recently proposed an algorithm for this purpose based on Non-negative Matrix Factorization (NMF), which iteratively estimates the clean power spectral densities (PSDs) of the sources and the strength of each source in each microphone signal, encoded in an interference matrix. Although it performs well, this method is too computationally demanding for full-length concerts lasting more than one hour.
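The abstract describes the model only at a high level. The sketch below assumes that each source PSD is an NMF product and that each microphone's PSD is the sum of the source PSDs weighted by the interference matrix λ; under those assumptions it shows how estimated PSDs and interference strengths yield a Wiener-style estimate of each source's contribution to each microphone. The iterative estimation of the NMF factors and of λ is not shown, and all names are hypothetical.

```python
import numpy as np

def source_psds(W, H):
    """Hypothetical NMF model of each source PSD: P[j] = W[j] @ H[j].

    W: (n_sources, n_freq, n_components), H: (n_sources, n_components, n_frames).
    Returns P with shape (n_sources, n_freq, n_frames).
    """
    return np.einsum("jfk,jkt->jft", W, H)

def reduce_interference(X, P, lam, eps=1e-12):
    """Wiener-style estimate of each source's contribution to each microphone.

    X:   complex microphone STFTs, shape (n_mics, n_freq, n_frames).
    P:   source PSDs, shape (n_sources, n_freq, n_frames).
    lam: assumed interference matrix, shape (n_mics, n_sources); lam[i, j] is
         the strength of source j in microphone i.
    Returns Y with shape (n_mics, n_sources, n_freq, n_frames).
    """
    # Contribution of source j to the model PSD of microphone i.
    contrib = lam[:, :, None, None] * P[None, :, :, :]   # (I, J, F, T)
    # Model PSD of microphone i: sum over sources of lam[i, j] * P[j].
    model = contrib.sum(axis=1, keepdims=True) + eps     # (I, 1, F, T)
    # Wiener gain applied to the complex STFT of microphone i.
    return contrib / model * X[:, None, :, :]
```

Since the gains for one microphone sum to one across sources, the per-source estimates for that microphone add back up to its recorded STFT.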

14 Views

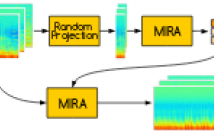

- A Simple and Effective Framework for A Priori SNR Estimation

88 Views

- Bayesian anisotropic Gaussian model for audio source separation

In audio source separation applications, it is common to model the sources as circularly symmetric Gaussian random variables, which is equivalent to assuming that the phase of each source is uniformly distributed. In this paper, we introduce an anisotropic Gaussian source model in which both the magnitude and phase parameters are modeled as random variables. In such a model, it becomes possible to promote a phase value that originates from a signal model and to adjust the relative importance of this underlying model-based phase constraint.
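To make the contrast concrete, the sketch below samples a complex Gaussian variable either in the circularly symmetric case (zero mean and zero pseudo-variance, hence a uniformly distributed phase) or in a non-circular case where a non-zero mean and pseudo-variance favor a given phase. This generic parameterization by mean, variance and pseudo-variance is an assumption for illustration, not the paper's specific anisotropic model, and the function name is hypothetical.

```python
import numpy as np

def sample_complex_gaussian(mean, var, pseudo_var, size, rng):
    """Draw z with E[z] = mean, E|z - mean|^2 = var, E[(z - mean)^2] = pseudo_var.

    mean = 0 and pseudo_var = 0 give the circularly symmetric (isotropic) case,
    whose phase is uniformly distributed. Requires |pseudo_var| <= var.
    """
    c = complex(pseudo_var)
    # Covariance of (Re z, Im z) that reproduces the requested var and pseudo_var.
    cov = 0.5 * np.array([
        [var + c.real, c.imag],
        [c.imag, var - c.real],
    ])
    xy = rng.multivariate_normal([0.0, 0.0], cov, size=size)
    return mean + xy[:, 0] + 1j * xy[:, 1]
```

For example, with `mean = 2 * np.exp(1j * 0.7)` and `pseudo_var = 0.5 * np.exp(2j * 0.7)`, the sampled phases concentrate around 0.7 rad instead of spreading uniformly over the circle.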

14 Views

- Source-Aware Context Network for Single-Channel Multi-speaker Speech Separation

Deep learning based approaches have achieved promising performance in speaker-dependent single-channel multi-speaker speech separation. However, partly due to the label permutation problem, they may encounter difficulties in speaker-independent conditions. Recent methods address this problem through assignment operations. Unlike these methods, we propose a novel source-aware context network, which explicitly takes the speech sources as well as the mixture signal as input.
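One widely used assignment operation of the kind mentioned above is permutation invariant training (PIT): the loss is evaluated under every pairing of estimated and reference speakers, and the smallest value is kept. The minimal NumPy sketch below uses a mean-squared-error criterion for illustration; it is not the proposed source-aware context network, and `pit_mse` is a hypothetical name.

```python
import itertools

import numpy as np

def pit_mse(estimates, references):
    """Permutation-invariant mean squared error.

    estimates, references: arrays of shape (n_speakers, n_samples).
    Returns (best_loss, best_perm), where best_perm[k] is the index of the
    reference signal assigned to estimate k.
    """
    n = estimates.shape[0]
    best_loss, best_perm = np.inf, None
    for perm in itertools.permutations(range(n)):
        # Row k of references[list(perm)] is the reference paired with estimate k.
        loss = np.mean((estimates - references[list(perm)]) ** 2)
        if loss < best_loss:
            best_loss, best_perm = loss, perm
    return best_loss, best_perm
```

Enumerating all permutations costs n! loss evaluations, which is acceptable for two or three speakers; because this loss is a sum of per-pair terms, larger cases can be solved as a linear assignment problem (for example with the Hungarian algorithm).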

34 Views

- Vectorwise coordinate descent algorithm for spatially regularized independent low-rank matrix analysis

Audio source separation is an important problem for many audio applications. Independent low-rank matrix analysis (ILRMA) is a recently proposed algorithm that exploits both the statistical independence between sources and the low-rank time-frequency structure of each source. In this paper, we develop a new framework that introduces a spatial regularization of the demixing matrix into ILRMA.
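For orientation, the sketch below implements basic ILRMA in its commonly used form: an Itakura-Saito NMF model of each source's power spectrogram, updated multiplicatively, alternated with iterative-projection (IP) updates of the per-frequency demixing matrices. It assumes a determined mixture (as many sources as microphones) and omits both the spatial regularization and the vectorwise coordinate descent introduced in this paper; the function name and defaults are hypothetical.

```python
import numpy as np

def ilrma(X, n_bases=2, n_iter=30, seed=0, eps=1e-12):
    """Basic, unregularized ILRMA sketch (IS-NMF source model + IP updates).

    X: complex mixture STFT, shape (n_freq, n_frames, n_mics).
    Returns Y (n_freq, n_frames, n_sources) and W (n_freq, n_sources, n_mics),
    with Y[i, j] = W[i] @ X[i, j]. Assumes n_sources == n_mics.
    """
    rng = np.random.default_rng(seed)
    I, J, M = X.shape
    N = M
    W = np.tile(np.eye(N, M, dtype=complex), (I, 1, 1))   # demixing matrices
    T = rng.random((I, n_bases, N)) + eps                  # NMF bases
    V = rng.random((n_bases, J, N)) + eps                  # NMF activations
    Y = np.einsum("inm,ijm->ijn", W, X)
    P = np.abs(Y) ** 2 + eps

    for _ in range(n_iter):
        # Multiplicative updates of the low-rank source model R = T V.
        R = np.einsum("ikn,kjn->ijn", T, V)
        T *= np.sqrt(np.einsum("ijn,kjn->ikn", P / R**2, V)
                     / np.einsum("ijn,kjn->ikn", 1.0 / R, V))
        R = np.einsum("ikn,kjn->ijn", T, V)
        V *= np.sqrt(np.einsum("ijn,ikn->kjn", P / R**2, T)
                     / np.einsum("ijn,ikn->kjn", 1.0 / R, T))
        R = np.einsum("ikn,kjn->ijn", T, V)

        # Iterative projection: update the demixing filter of each source n.
        for n in range(N):
            # Weighted covariance U_in = (1/J) sum_j x_ij x_ij^H / r_ijn.
            U = np.einsum("ijm,ijl,ij->iml", X, X.conj(), 1.0 / R[:, :, n]) / J
            e = np.zeros((I, M, 1), dtype=complex)
            e[:, n, 0] = 1.0
            w = np.linalg.solve(W @ U, e)[:, :, 0]          # (W_i U_in)^-1 e_n
            scale = np.sqrt(np.einsum("im,iml,il->i", w.conj(), U, w).real)
            w = w / scale[:, None]
            W[:, n, :] = w.conj()                           # row n holds w_in^H

        Y = np.einsum("inm,ijm->ijn", W, X)
        P = np.abs(Y) ** 2 + eps

        # Resolve the scale ambiguity between W and the NMF model.
        lam = np.sqrt(P.mean(axis=(0, 1)))
        W /= lam[None, :, None]
        Y /= lam
        P /= lam**2
        T /= lam**2

    return Y, W
```

The separated signals are only defined up to a per-frequency scale, so in practice a projection-back step onto a reference microphone usually follows.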

60 Views

- Geometric Information Based Monaural Speech Separation Using Deep Neural Network

87 Views