- Read more about Augmentation strategy optimization for language understanding

- Log in to post comments

- Categories:

4 Views

4 Views

- Read more about Augmentation strategy optimization for language understanding

- Log in to post comments

- Categories:

7 Views

7 Views

Humans express ideas, beliefs, and statements through language. The manner of expression can carry information indicating the author's degree of confidence in their statement. Understanding the certainty level of a claim is crucial in areas such as medicine, finance, engineering, and many others where errors can lead to disastrous results. In this work, we apply a joint model that leverages words and part-of-speech tags to improve hedge detection in text and achieve a new top score on the CoNLL-2010 Wikipedia corpus.

- Categories:

28 Views

28 Views

- Read more about PROGRESSIVE DIALOGUE STATE TRACKING FOR MULTI-DOMAIN DIALOGUE SYSTEMS

- Log in to post comments

There are two critical observations in multi-domain dialogue state tracking (DST) ignored in most existing work. First, the number of triples (domain-slot-value) in dialogue states generally increases with the growth of dialogue turns. Second, although dialogue states are accumulating, the difference between two adjacent turns is steadily minor. To model the two observations, we propose to divide the task into two successive procedures: progressive domain-slot tracking and shrunk value prediction.

- Categories:

20 Views

20 Views

- Read more about Novel realizations of speech-driven head movements with generative adversarial networks

- Log in to post comments

Head movement is an integral part of face-to-face communications. It is important to investigate methodologies to generate naturalistic movements for conversational agents (CAs). The predominant method for head movement generation is using rules based on the meaning of the message. However, the variations of head movements by these methods are bounded by the predefined dictionary of gestures. Speech-driven methods offer an alternative approach, learning the relationship between speech and head movements from real recordings.

- Categories:

28 Views

28 Views

- Read more about Combining Acoustics, Content and Interaction Features to Find Hot Spots in Meetings

- Log in to post comments

Involvement hot spots have been proposed as a useful concept for meeting analysis and studied off and on for over 15 years. These are regions of meetings that are marked by high participant involvement, as judged by human annotators. However, prior work was either not conducted in a formal machine learning setting, or focused on only a subset of possible meeting features or downstream applications (such as summarization). In this paper we investigate to what extent various acoustic, linguistic and pragmatic aspects of the meetings, both in isolation and jointly, can help detect hot spots.

- Categories:

15 Views

15 Views

- Read more about WHAT IS BEST FOR SPOKEN LANGUAGE UNDERSTANDING: SMALL BUT TASK-DEPENDANT EMBEDDINGS OR HUGE BUT OUT-OF-DOMAIN EMBEDDINGS?

- Log in to post comments

Word embeddings are shown to be a great asset for several Natural Language and Speech Processing tasks. While they are already evaluated on various NLP tasks, their evaluation on spoken or natural language understanding (SLU) is less studied. The goal of this study is two-fold: firstly, it focuses on semantic evaluation of common word embeddings approaches for SLU task; secondly, it investigates the use of two different data sets to train the embeddings: small and task-dependent corpus or huge and out-of-domain corpus.

- Categories:

24 Views

24 Views

- Read more about Multitask Learning with Capsule Networks for Speech-to-Intent Applications

- Log in to post comments

Voice controlled applications can be a great aid to society, especially for physically challenged people. However this requires robustness to all kinds of variations in speech. A spoken language understanding system that learns from interaction with and demonstrations from the user, allows the use of such a system in different settings and for different types of speech, even for deviant or impaired speech, while also allowing the user to choose a phrasing.

- Categories:

15 Views

15 Views

- Read more about Multimodal One-shot Learning of Speech and Images

- Log in to post comments

Image a robot is shown new concepts visually together with spoken tags, e.g. "milk", "eggs", "butter". After seeing one paired audiovisual example per class, it is shown a new set of unseen instances of these objects, and asked to pick the "milk". Without receiving any hard labels, could it learn to match the new continuous speech input to the correct visual instance? Although unimodal one-shot learning has been studied, where one labelled example in a single modality is given per class, this example motivates multimodal one-shot learning.

- Categories:

9 Views

9 Views

- Read more about Context-aware Neural-based Dialog Act Classification On Automatically Generated Transcriptions

- Log in to post comments

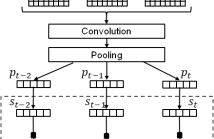

This paper presents our latest investigations on dialog act (DA) classification on automatically generated transcriptions. We propose a novel approach that combines convolutional neural networks (CNNs) and conditional random fields (CRFs) for context modeling in DA classification. We explore the impact of transcriptions generated from different automatic speech recognition systems such as hybrid TDNN/HMM and End-to-End systems on the final performance. Experimental results on two benchmark datasets (MRDA and SwDA) show that the combination CNN and CRF improves consistently the accuracy.

- Categories:

20 Views

20 Views