The 2018 IEEE Data Science Workshop is a new workshop that aims to bring together researchers in academia and industry to share the most recent and exciting advances in data science theory and applications. In particular, the event will gather researchers and practitioners in various academic disciplines of data science, including signal processing, statistics, machine learning, data mining and computer science, along with experts in academic and industrial domains, such as personalized health and medicine, earth and environmental science, applied physics, finance and economics, and intelligent manufacturing.

- SAVE - Space Alternating Variational Estimation for Sparse Bayesian Learning

10 Views

- Nonparametric Distributed Detection Using One-Sample Anderson-Darling Test and p-value Fusion

This paper proposes a method for distributed detection in scenarios where the probability models associated with the hypotheses are not explicitly known. The underlying distributions are accurately learned from the data by bootstrapping. We propose using a nonparametric one-sample Anderson-Darling test locally at each sensor. The one-sample version of the test gives superior performance compared to the two-sample alternative. The local decision statistics, in particular the p-values, are then sent to a fusion center, which makes the final decision.
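The local-test-plus-fusion pipeline can be sketched as follows. This is a minimal illustration, not the paper's implementation: it assumes a known null CDF (standard normal here), estimates each sensor's p-value by Monte Carlo simulation under the null rather than by the bootstrap the abstract mentions, and fuses the p-values with Fisher's method, which is one common fusion rule chosen here as an assumption. The function names `ad_statistic`, `ad_pvalue`, and `fisher_fusion` are hypothetical.

```python
import math
import random
from statistics import NormalDist


def ad_statistic(u):
    """One-sample Anderson-Darling statistic for values u = F0(x) in (0, 1)."""
    # Clamp away from 0 and 1 so the logarithms stay finite.
    u = sorted(min(max(val, 1e-12), 1 - 1e-12) for val in u)
    n = len(u)
    s = sum((2 * i + 1) * (math.log(u[i]) + math.log(1 - u[n - 1 - i]))
            for i in range(n))
    return -n - s / n


def ad_pvalue(x, null_cdf, n_sim=500, seed=0):
    """Monte Carlo p-value (assumption: simulation stands in for the bootstrap)."""
    rng = random.Random(seed)
    n = len(x)
    observed = ad_statistic([null_cdf(val) for val in x])
    # Under the null, F0(x) is uniform on (0, 1), so uniform draws suffice.
    exceed = sum(
        ad_statistic([rng.random() for _ in range(n)]) >= observed
        for _ in range(n_sim)
    )
    return (1 + exceed) / (1 + n_sim)  # never exactly 0


def fisher_fusion(pvalues):
    """Fuse independent p-values with Fisher's method (assumed fusion rule)."""
    k = len(pvalues)
    half = -sum(math.log(p) for p in pvalues)  # T / 2 with T = -2 * sum(ln p)
    # Chi-square survival function with 2k degrees of freedom, closed form.
    term, total = 1.0, 0.0
    for i in range(k):
        total += term
        term *= half / (i + 1)
    return math.exp(-half) * total


# Usage: three sensors observe data shifted away from the N(0, 1) null.
null = NormalDist()
n = 50
shifted = [1.0 + null.inv_cdf((i + 0.5) / n) for i in range(n)]
local_p = [ad_pvalue(shifted, null.cdf, seed=s) for s in range(3)]
fused_p = fisher_fusion(local_p)
print(local_p, fused_p)
```

Each sensor only transmits a single number, so the communication cost to the fusion center is independent of the local sample size.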

34 Views

- Multi-scale algorithms for optimal transport

Optimal transport is a geometrically intuitive and robust way to quantify differences between probability measures.

It is becoming increasingly popular as a numerical tool in image processing, computer vision and machine learning.

A key challenge is its efficient computation, in particular on large problems. Various algorithms exist, tailored to different special cases.

Multi-scale methods can be applied to classical discrete algorithms, as well as entropy regularization techniques. They provide a good compromise between efficiency and flexibility.
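To make the entropy-regularization idea concrete, here is a minimal single-scale Sinkhorn iteration for discrete optimal transport. It is a sketch under stated assumptions rather than any of the multi-scale algorithms referred to above: the function name `sinkhorn`, the regularization strength `eps`, and the fixed iteration count are illustrative choices. A multi-scale scheme would typically solve coarsened versions of the problem first and use them to initialize or sparsify the fine-scale problem, which this sketch does not attempt.

```python
import math


def sinkhorn(a, b, cost, eps=0.5, n_iter=200):
    """Entropy-regularized transport plan between histograms a and b."""
    n, m = len(a), len(b)
    # Gibbs kernel; a smaller eps sharpens the plan but slows convergence.
    K = [[math.exp(-cost[i][j] / eps) for j in range(m)] for i in range(n)]
    u, v = [1.0] * n, [1.0] * m
    for _ in range(n_iter):
        # Alternately rescale rows and columns to match the two marginals.
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]


# Toy problem (hypothetical data): three points on a line, squared-distance cost.
points = [0.0, 0.5, 1.0]
cost = [[(x - y) ** 2 for y in points] for x in points]
a = [0.5, 0.3, 0.2]
b = [0.2, 0.3, 0.5]
plan = sinkhorn(a, b, cost)
row_sums = [sum(row) for row in plan]
col_sums = [sum(plan[i][j] for i in range(3)) for j in range(3)]
print(row_sums, col_sums)
```

The row and column sums of the returned plan should reproduce `a` and `b`, which is a quick sanity check on convergence.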

23 Views

- Vector compression for similarity search using Multi-layer Sparse Ternary Codes

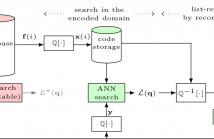

It was shown recently that Sparse Ternary Codes (STC) possess superior "coding gain" compared to the classical binary hashing framework and can successfully be used for large-scale search applications. This work extends STC to compression and proposes a rate-distortion efficient design. We first study a single-layer setup, where we show that binary encoding intrinsically suffers from poor compression quality while STC, thanks to its flexibility in design, can achieve near-optimal rate allocation. We further show that single-layer codes should be limited to very low rates.

14 Views

- Predictive Maintenance of Photovoltaic Panels via Deep Learning

We apply convolutional neural networks (CNN) for monitoring the operation of photovoltaic panels. In particular, we predict the daily electrical power curve of a photovoltaic panel based on the power curves of neighboring panels. An exceptionally large deviation between predicted and actual (observed) power curve indicates a malfunctioning panel. The problem is challenging because the power curve depends on many factors such as weather conditions and the surrounding objects causing shadows with a regular time pattern.

49 Views

In many settings, we can accurately model high-dimensional data as lying in a union of subspaces. Subspace clustering is the process of inferring the subspaces and determining which point belongs to each subspace. In this paper we study a robust variant of sparse subspace clustering (SSC). While SSC is well-understood when there is little or no noise, less is known about SSC under significant noise or missing entries. We establish clustering guarantees in the presence of corrupted or missing entries.

152 Views

- Convolutional Neural Networks via Node-Varying Graph Filters

Convolutional neural networks (CNNs) are being applied to an increasing number of problems and fields due to their superior performance in classification and regression tasks. Since two of the key operations that CNNs implement are convolution and pooling, this type of network is implicitly designed to act on data described by regular structures such as images. Motivated by the recent interest in processing signals defined on irregular domains, we advocate a CNN architecture that operates on signals supported on graphs.
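As a rough illustration of filtering a signal supported on a graph, the sketch below implements a node-varying graph filter of the form y = sum over l of diag(h^(l)) S^l x, where S is a graph shift operator such as the adjacency matrix. The abstract does not spell out this formula, so the form, the function names, and the toy path graph are assumptions; when all nodes share the same taps, the operation reduces to a classical (node-invariant) graph filter.

```python
def mat_vec(S, x):
    """Multiply the shift operator S (list of rows) by the graph signal x."""
    return [sum(s_ij * x_j for s_ij, x_j in zip(row, x)) for row in S]


def node_varying_filter(S, x, taps):
    """Apply y = sum_l diag(taps[l]) S^l x.

    taps[l][i] is the weight node i applies to the l-times shifted signal.
    """
    y = [0.0] * len(x)
    shifted = list(x)  # S^0 x
    for tap in taps:
        y = [y_i + h_i * z_i for y_i, h_i, z_i in zip(y, tap, shifted)]
        shifted = mat_vec(S, shifted)  # move on to the next power of S
    return y


# Toy example (hypothetical): path graph on 3 nodes, impulse at node 0.
S = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
x = [1.0, 0.0, 0.0]

# Same taps at every node: a classical graph filter x + 0.5 * S x.
y_shared = node_varying_filter(S, x, [[1.0, 1.0, 1.0], [0.5, 0.5, 0.5]])
# Node 1 weights its one-hop neighborhood more heavily than the others.
y_varying = node_varying_filter(S, x, [[1.0, 1.0, 1.0], [0.5, 2.0, 0.5]])
print(y_shared, y_varying)
```

Letting each node hold its own taps gives the filter more degrees of freedom than a shared-coefficient filter of the same order, at the cost of losing the weight sharing that a regular convolution enjoys.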

40 Views

- Deep CNN Sparse Coding Analysis

Deep Convolutional Sparse Coding (D-CSC) is a framework reminiscent of deep convolutional neural nets (DCNN), but by omitting the learning of the dictionaries one can more transparently analyse the role of the activation function and its ability to recover activation paths through the layers. Papyan, Romano, and Elad conducted an analysis of such an architecture \cite{2016arXiv160708194P}, demonstrated the relationship with DCNNs and proved conditions under which a D-CSC is guaranteed to recover activation paths.

59 Views

- MotifNet: A Motif-Based Graph Convolutional Network for Directed Graphs

Deep learning on graphs, and in particular graph convolutional neural networks, has recently attracted significant attention in the machine learning community.

219 Views

- Alternating autoencoders for matrix completion

We consider autoencoders (AEs) for matrix completion (MC) with application to collaborative filtering (CF) for recommendation systems. It is observed that for a given sparse user-item rating matrix, denoted as M, an AE performs matrix factorization so that the recovered matrix is represented as a product of user and item feature matrices.
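The factorization view can be illustrated without any neural network. The sketch below fits a rank-1 model, with entry (i, j) of M approximated by u[i] * v[j], to the observed entries of a small rating matrix by alternating least squares, then uses it to predict a missing entry. It is a plain matrix-factorization stand-in, not the alternating autoencoder of the paper; the function name `als_rank1`, the rank-1 restriction, and the toy data are assumptions for illustration.

```python
def als_rank1(M, n_iter=200):
    """Fit M[i][j] ~ u[i] * v[j] on observed entries; None marks a missing rating.

    Assumes every row and every column has at least one observed entry.
    """
    n, m = len(M), len(M[0])
    u, v = [1.0] * n, [1.0] * m
    for _ in range(n_iter):
        # Update user factors with item factors held fixed.
        for i in range(n):
            obs = [j for j in range(m) if M[i][j] is not None]
            u[i] = sum(M[i][j] * v[j] for j in obs) / sum(v[j] ** 2 for j in obs)
        # Update item factors with user factors held fixed.
        for j in range(m):
            obs = [i for i in range(n) if M[i][j] is not None]
            v[j] = sum(M[i][j] * u[i] for i in obs) / sum(u[i] ** 2 for i in obs)
    return u, v


# Toy rating matrix (hypothetical): exactly rank 1, with one entry hidden.
ratings = [[1.0, 2.0],
           [2.0, 4.0],
           [3.0, None]]
u, v = als_rank1(ratings)
predicted = u[2] * v[1]  # estimate of the hidden rating
print(predicted)
```

Here the user factors play the role of the row features and the item factors the role of the column features, so the completed matrix is their product, as in the factorization described above.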

30 Views
