- Read more about FANTOM: Federated Adversarial Network for Training Multi-sequence Magnetic Resonance Imaging in Semantic Segmentation

- Log in to post comments

Ischemic stroke lesions (ISL) segmentation aids clinicians in the diagnosis of stroke in acute care units. But, a generalized segmentation model requires data from various patients. However considering the data privacy, the patient's data is not available for centralized training. The Federated Learning (FL) framework overcomes this, but in FL, semantic segmentation is challenging due to the complex model, adversarial training, and non-independent and identically distributed dataset.

- Categories:

36 Views

36 Views

- Read more about Improving Medical Dialogue Generation with Abstract Meaning Representations

- Log in to post comments

Medical Dialogue Generation plays a critical role in telemedicine by facilitating the dissemination of medical expertise to patients. Existing studies focus on incorporating textual representations, which have limited their ability to represent text semantics, such as ignoring important medical entities.

- Categories:

16 Views

16 Views

- Read more about Unravel Anomalies: An End-to-end Seasonal-Trend Decomposition Approach for Time Series Anomaly Detection

- Log in to post comments

Traditional Time-series Anomaly Detection (TAD) methods often struggle with the composite nature of complex time-series data and a diverse array of anomalies. We introduce TADNet, an end-to-end TAD model that leverages Seasonal-Trend Decomposition to link various types of anomalies to specific decomposition components, thereby simplifying the analysis of complex time-series and enhancing detection performance. Our training methodology, which includes pre-training on a synthetic dataset followed by fine-tuning, strikes a balance between effective decomposition and precise anomaly detection.

- Categories:

77 Views

77 Views

- Read more about ACTIVE EXPLAINABLE RECOMMENDATION WITH LIMITED LABELING BUDGETS

- Log in to post comments

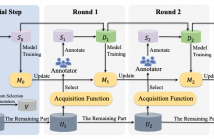

Explainable recommendation has gained significant attention due to its potential to enhance user trust and system transparency. Previous studies primarily focus on refining model architectures to generate more informative explanations, assuming that the explanation data is sufficient and easy to acquire. However, in practice, obtaining the ground truth for explanations can be costly since individuals may not be inclined to put additional efforts to provide behavior explanations.

- Categories:

59 Views

59 Views

- Read more about HIM: DISCOVERING IMPLICIT RELATIONSHIPS IN HETEROGENEOUS SOCIAL NETWORKS

- Log in to post comments

To date, research on relation mining has typically focused on analyzing explicit relationships between entities, while ignoring the underlying connections between entities, known as implicit relationships. Exploring implicit relationships can reveal more about social dynamics and potential relationships in heterogeneous social networks to better explain complex social behaviors. The research presented in this paper explores implicit relationships discovery methods in the context of heterogeneous social networks.

- Categories:

136 Views

136 Views

- Read more about SnappyR: A New High-Speed Lossless Data Compression Algorithm

- Log in to post comments

- Categories:

117 Views

117 Views

- Read more about LZ4r - A New Fast Compression Algorithm for High-Speed Data Storage Systems

- Log in to post comments

- Categories:

102 Views

102 Views

- Read more about Probabilistic Fine-grained Urban Flow Inference with Normalizing Flows

- Log in to post comments

Fine-grained urban flow inference (FUFI) aims at enhancing the resolution of traffic flow, which plays an important role in intelligent traffic management. Existing FUFI methods are mainly based on techniques from image super-resolution (SR) models, which cannot fully capture the influence of external factors and face the ill-posed problem in SR tasks. In this paper, we propose UFI-Flow – Urban Flow Inference via normalizing Flow, a novel model for addressing the FUFI problem in a principled manner by using a single probabilistic loss.

ICASSP_slides.pptx

ICASSP_5513_poster.pdf

- Categories:

37 Views

37 Views

- Read more about Fast and Compact Set Intersection through Recursive Universe Partitioning

- 4 comments

- Log in to post comments

We present a data structure that encodes a sorted integer sequence in small space allowing, at the same time, fast intersection operations. The data layout is carefully designed to exploit word-level parallelism and SIMD instructions, hence providing good practical performance. The core algorithmic idea is that of recursive partitioning the universe of representation: a markedly different paradigm than the widespread strategy of partitioning the sequence based on its length.

- Categories:

106 Views

106 Views

- Read more about Compressing and Randomly Accessing Sequences

- 1 comment

- Log in to post comments

In this paper we consider the problem of storing sequences of symbols in

a compressed format, while supporting random access to the symbols without

decompression. Although this is a well-studied problem when the data is

textual, the kind of sequences we look at are not textual, and we argue

that traditional compression methods used in the text algorithms community

(such as compressors targeting $k$-th order empirical entropy) do not

perform as well on these sequential data, and simpler methods such

- Categories:

132 Views

132 Views