- Read more about Adversarial Robustness for Deep Metric Learning

Deep Metric Learning (DML) based on Convolutional Neural Networks (CNNs) is vulnerable to adversarial attacks. Adversarial training, where adversarial samples are generated at each iteration, is one of the prominent defense techniques for robust DML. However, adversarial training increases computational complexity and causes a trade-off between robustness and generalization. This study proposes a lightweight, robust DML framework that learns a non-linear projection to map the embeddings of a CNN into an adversarially robust space.
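To make "a non-linear projection on top of a CNN's embeddings" concrete, here is a minimal NumPy sketch assuming a hypothetical two-layer ReLU projection head applied to frozen embeddings and scored with a standard triplet margin loss. The layer sizes, the `project` and `triplet_loss` helpers, and the margin value are illustrative assumptions, not the authors' architecture. The training loop, and how adversarial samples would enter it, are omitted.

```python
import numpy as np

def project(embeddings, W1, b1, W2, b2):
    """Hypothetical two-layer non-linear projection head on frozen CNN embeddings."""
    hidden = np.maximum(embeddings @ W1 + b1, 0.0)           # ReLU
    out = hidden @ W2 + b2
    return out / np.linalg.norm(out, axis=1, keepdims=True)  # L2-normalise for metric learning

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Standard triplet margin loss on squared Euclidean distances."""
    d_pos = np.sum((anchor - positive) ** 2, axis=1)
    d_neg = np.sum((anchor - negative) ** 2, axis=1)
    return np.mean(np.maximum(d_pos - d_neg + margin, 0.0))

rng = np.random.default_rng(0)
emb_dim, hid_dim, out_dim = 512, 256, 128   # hypothetical sizes
W1, b1 = rng.normal(0, 0.02, (emb_dim, hid_dim)), np.zeros(hid_dim)
W2, b2 = rng.normal(0, 0.02, (hid_dim, out_dim)), np.zeros(out_dim)

# Random stand-ins for CNN embeddings of anchor, positive and negative images.
a, p, n = (rng.normal(size=(8, emb_dim)) for _ in range(3))
za, zp, zn = (project(x, W1, b1, W2, b2) for x in (a, p, n))
print(triplet_loss(za, zp, zn))
```

Only the small head would be trained in such a setup, which is what keeps it lightweight compared with retraining the whole CNN.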

16 Views

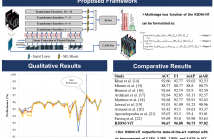

Deepfake detection is critical in mitigating the societal threats posed by manipulated videos. While various algorithms have been developed for this purpose, challenges arise when detectors operate on externally captured content, for example when users photograph deepfake images with a smartphone and upload the photos to the Internet. One significant challenge in such scenarios is the presence of Moiré patterns, which degrade image quality and confound conventional classification algorithms, including deep neural networks (DNNs). The impact of Moiré patterns on deepfake detectors remains largely unexplored.
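Moiré patterns in recaptured images are a form of aliasing between a fine periodic pattern and the camera's pixel grid. The sketch below is a simplified 1-D model under that assumption, not the paper's setup: a grating of 0.9 cycles per camera pixel, sampled once per pixel, is indistinguishable from a coarse 0.1 cycles/pixel band. The helper names are hypothetical.

```python
import numpy as np

def sample_grating(f_screen, num_pixels):
    """Sample cos(2*pi*f*x) once per camera pixel (1-D simplification)."""
    x = np.arange(num_pixels)
    return np.cos(2 * np.pi * f_screen * x)

def alias_frequency(f, fs=1.0):
    """Apparent frequency after sampling at rate fs (folded into [0, fs/2])."""
    f_mod = f % fs
    return min(f_mod, fs - f_mod)

captured = sample_grating(0.9, 64)
apparent = alias_frequency(0.9)            # about 0.1 cycles/pixel: a coarse Moiré band
reference = sample_grating(apparent, 64)
print(apparent, np.allclose(captured, reference))
```

Real screen recaptures are 2-D and involve sub-pixel layouts and lens blur, so this only illustrates why spurious low-frequency bands appear.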

28 Views

- Read more about STRENGTHENING DEEP LEARNING MODEL FOR ROBUST SCREENING OF VOLUMETRIC CHEST RADIOGRAPHIC SCANS

Emerging deep learning algorithms have shown significant potential for developing efficient computer-aided diagnosis tools that automatically detect lung infections in chest radiographs. However, many existing methods are slice-based and require manual selection of appropriate slices from the entire CT scan, a tedious task that requires expert radiologists.

14 Views

- Read more about Recurrent 3-D Multi-level Visual Transformer for Joint Classification of Heterogeneous 2-D and 3-D Radiographic Data

Recent advancements in artificial intelligence algorithms for medical imaging show significant potential in automating the detection of lung infections from chest radiograph scans. However, current approaches often focus solely on either 2-D or 3-D scans, failing to leverage the combined advantages of both modalities. Moreover, conventional slice-based methods place a manual burden on radiologists for slice selection.

36 Views

- Read more about SEMI-SUPERVISED GRAPHICAL DEEP DICTIONARY LEARNING FOR HYPERSPECTRAL IMAGE CLASSIFICATION FROM LIMITED SAMPLES

In this work, we propose a semi-supervised deep feature generation network that accounts for local similarities. It is based on the deep dictionary learning (DDL) framework. The formulation accounts for two unique aspects of hyperspectral classification. First, the total number of pixels (samples) to be labeled is fixed; this permits a semi-supervised formulation in which only a few pixels are labeled as training data. Second, the pixels are spatially correlated; this leads to a graph-regularized formulation.
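Graph regularization of this kind is commonly written as a penalty λ·tr(Z L Zᵀ) added to the dictionary-learning reconstruction term, where column i of Z is the learned representation of pixel i, W is a pixel-affinity matrix, and L = D − W is its graph Laplacian. Assuming that standard form (the paper's exact objective may differ), the sketch below checks numerically that the trace penalty equals ½·Σᵢⱼ Wᵢⱼ‖zᵢ − zⱼ‖², i.e. it pushes strongly connected pixels toward similar codes. The affinity matrix here is random, for illustration only.

```python
import numpy as np

def graph_laplacian(W):
    """Unnormalised Laplacian L = D - W of a symmetric affinity matrix W."""
    return np.diag(W.sum(axis=1)) - W

def graph_penalty(Z, W):
    """tr(Z L Z^T), where column i of Z is the representation of pixel i."""
    return np.trace(Z @ graph_laplacian(W) @ Z.T)

def pairwise_penalty(Z, W):
    """Equivalent form: 0.5 * sum_ij W_ij * ||z_i - z_j||^2."""
    diffs = Z[:, :, None] - Z[:, None, :]          # shape (k, n, n)
    return 0.5 * np.sum(W * np.sum(diffs ** 2, axis=0))

rng = np.random.default_rng(0)
n_pixels, k = 6, 3
W = rng.random((n_pixels, n_pixels))
W = (W + W.T) / 2                                  # affinities must be symmetric
np.fill_diagonal(W, 0.0)
Z = rng.normal(size=(k, n_pixels))
print(graph_penalty(Z, W), pairwise_penalty(Z, W))
```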

12 Views

- Read more about FOLLOWING THE EMBEDDING: IDENTIFYING TRANSITION PHENOMENA IN WAV2VEC 2.0 REPRESENTATIONS OF SPEECH AUDIO

Although transformer-based models have improved the state-of-the-art in speech recognition, it is still not well understood what information from the speech signal these models encode in their latent representations. This study investigates the potential of using labelled data (TIMIT) to probe wav2vec 2.0 embeddings for insights into the encoding and visualisation of speech signal information at phone boundaries. Our experiment involves training probing models to detect phone-specific articulatory features in the hidden layers based on IPA classifications.
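A probing model of this kind is often a simple linear classifier trained on frozen hidden states from one layer; comparing probe accuracy across layers indicates where a feature is linearly decodable. The sketch below is a minimal logistic-regression probe assuming that setup; the data are synthetic stand-ins, not real wav2vec 2.0 outputs or TIMIT labels, and the binary "voiced" feature is a hypothetical example.

```python
import numpy as np

def train_linear_probe(H, y, lr=0.5, steps=500):
    """Logistic-regression probe predicting a binary articulatory feature from
    frozen hidden states H (n_frames x dim), using full-batch gradient descent."""
    n, d = H.shape
    w, b = np.zeros(d), 0.0
    for _ in range(steps):
        prob = 1.0 / (1.0 + np.exp(-(H @ w + b)))   # sigmoid
        grad = prob - y                             # d(cross-entropy)/d(logit)
        w -= lr * H.T @ grad / n
        b -= lr * grad.mean()
    return w, b

def probe_accuracy(H, y, w, b):
    return np.mean(((H @ w + b) > 0).astype(int) == y)

# Synthetic stand-in for one layer's frame embeddings.
rng = np.random.default_rng(0)
H = rng.normal(size=(400, 16))
y = (H @ rng.normal(size=16) > 0).astype(int)   # e.g. 1 = "voiced", 0 = "voiceless"
w, b = train_linear_probe(H, y)
print(probe_accuracy(H, y, w, b))
```

In practice one would train one probe per hidden layer on held-out frames and compare accuracies, rather than report training accuracy as done here.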

91 Views

- Read more about ENHANCING NOISY LABEL LEARNING VIA UNSUPERVISED CONTRASTIVE LOSS WITH LABEL CORRECTION BASED ON PRIOR KNOWLEDGE

To alleviate the negative impacts of noisy labels, most noisy label learning (NLL) methods dynamically divide the training data into two types, “clean samples” and “noisy samples”, during training. However, the conventional selection of clean samples depends heavily on the features learned in the early stages of training, which makes it difficult to guarantee that the selected samples are actually clean when the noise ratio is high.
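One widely used way to perform the clean/noisy split is the small-loss criterion: samples on which the current model has the lowest loss are treated as clean. The sketch below assumes that criterion with a fixed `clean_ratio` (a hypothetical parameter; other methods fit a mixture model to the losses instead). It also shows the weakness described above: the split is only as good as the model that produced `probs`.

```python
import numpy as np

def cross_entropy_per_sample(probs, labels):
    """Per-sample cross-entropy of predicted probabilities against (possibly noisy) labels."""
    return -np.log(np.clip(probs[np.arange(len(labels)), labels], 1e-12, 1.0))

def small_loss_split(losses, clean_ratio):
    """Treat the clean_ratio fraction of samples with the smallest loss as clean."""
    n_clean = int(round(clean_ratio * len(losses)))
    order = np.argsort(losses)
    return np.sort(order[:n_clean]), np.sort(order[n_clean:])

probs = np.array([[0.9, 0.1], [0.2, 0.8], [0.7, 0.3], [0.6, 0.4]])
labels = np.array([0, 1, 1, 0])     # sample 2's label disagrees with the model
losses = cross_entropy_per_sample(probs, labels)
clean, noisy = small_loss_split(losses, clean_ratio=0.75)
print(clean, noisy)
```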

80 Views

- Read more about A Binary BP Decoding using Posterior Adjustment for Quantum LDPC Codes

Although belief propagation (BP) decoders are efficient and perform well on classical low-density parity-check (LDPC) codes, their performance degrades on quantum LDPC (QLDPC) codes due to limitations specific to the quantum setting. In this paper, we propose a posterior adjustment of either a single qubit or multiple qubits within binary BP. The adjustment process changes the posterior likelihood ratio for one or multiple qubits according to the
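The sketch below shows syndrome-based binary BP (sum-product, tanh rule) as it is typically applied to one parity-check matrix of a QLDPC code, plus a simple post-processing step that adjusts the LLR of the least reliable qubit when BP fails. That adjustment rule (pin the qubit to "no error", then "error", and rerun) is a hypothetical stand-in chosen for illustration; the paper's actual rule is not given in this excerpt. The toy matrix shows a degenerate case where plain BP cannot break the symmetry.

```python
import numpy as np

def bp_decode(H, syndrome, prior_llr, max_iter=20):
    """Syndrome-based sum-product BP over GF(2).
    H: (m, n) binary parity-check matrix; syndrome: length-m 0/1 vector;
    prior_llr: length-n channel LLRs, log(P(no error) / P(error)).
    Returns (hard-decision error estimate, posterior LLRs, converged flag)."""
    m, n = H.shape
    edges = H.astype(bool)
    sign = 1.0 - 2.0 * syndrome                      # +1 if s_c = 0, -1 if s_c = 1
    v2c = np.where(edges, prior_llr[None, :], 0.0)   # variable-to-check messages
    for _ in range(max_iter):
        t = np.where(edges, np.tanh(v2c / 2.0), 1.0)
        c2v = np.zeros((m, n))
        for c in range(m):
            for v in np.flatnonzero(edges[c]):
                prod = np.clip(np.prod(np.delete(t[c], v)), -1 + 1e-12, 1 - 1e-12)
                c2v[c, v] = sign[c] * 2.0 * np.arctanh(prod)  # tanh rule, excluding v
        posterior = prior_llr + c2v.sum(axis=0)
        error = (posterior < 0).astype(int)
        if np.array_equal((H @ error) % 2, syndrome):
            return error, posterior, True
        v2c = np.where(edges, posterior[None, :] - c2v, 0.0)  # extrinsic messages
    return error, posterior, False

def bp_with_adjustment(H, syndrome, prior_llr, pin=20.0):
    """Hypothetical post-processing, NOT the paper's rule: if BP fails, pin the
    least reliable qubit's LLR to 'no error', then to 'error', and rerun BP."""
    error, posterior, ok = bp_decode(H, syndrome, prior_llr)
    if ok:
        return error, True
    q = int(np.argmin(np.abs(posterior)))
    for value in (pin, -pin):
        adjusted = prior_llr.copy()
        adjusted[q] = value
        error, _, ok = bp_decode(H, syndrome, adjusted)
        if ok:
            return error, True
    return error, False

# Degenerate toy case: one check on two qubits, so errors [1, 0] and [0, 1]
# explain the syndrome equally well and plain BP's posteriors stay near zero.
H = np.array([[1, 1]])
s = np.array([1])
p = 0.1
prior = np.full(2, np.log((1 - p) / p))
print(bp_decode(H, s, prior)[2], bp_with_adjustment(H, s, prior))
```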

82 Views

- Read more about Inference of genetic effects via Approximate Message Passing

Efficient utilization of large-scale biobank data is crucial for inferring the genetic basis of disease and predicting health outcomes from DNA. Yet we lack efficient, accurate methods that scale to data where electronic health records are linked to whole genome sequence information. To address this issue, our paper develops a new algorithmic paradigm based on Approximate Message Passing (AMP), which is specifically tailored for genomic prediction and association testing.
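For readers unfamiliar with AMP, the sketch below is the generic textbook iteration for sparse linear regression y = Xβ + noise with a soft-thresholding denoiser; it is not the paper's genomics-specific algorithm. It assumes a Gaussian design with i.i.d. N(0, 1/n) entries (the setting AMP's theory covers) instead of real genotype data, and the threshold multiplier `alpha` is an illustrative choice. The key ingredient distinguishing AMP from plain iterative thresholding is the Onsager correction term in the residual update.

```python
import numpy as np

def soft_threshold(x, theta):
    """Soft-thresholding denoiser eta(x; theta)."""
    return np.sign(x) * np.maximum(np.abs(x) - theta, 0.0)

def amp_sparse_regression(X, y, alpha=1.5, n_iter=30):
    """Generic AMP for y = X beta + noise, assuming X has i.i.d. N(0, 1/n) entries."""
    n, p = X.shape
    beta = np.zeros(p)
    z = y.copy()
    for _ in range(n_iter):
        tau = np.linalg.norm(z) / np.sqrt(n)          # estimate of the effective noise level
        beta = soft_threshold(beta + X.T @ z, alpha * tau)
        # Onsager correction: previous residual times (active coefficients / n).
        z = y - X @ beta + z * np.count_nonzero(beta) / n
    return beta

# Synthetic example (random design, not biobank data).
rng = np.random.default_rng(0)
n, p, k = 500, 1000, 50
X = rng.normal(0.0, 1.0 / np.sqrt(n), size=(n, p))
beta_true = np.zeros(p)
beta_true[rng.choice(p, size=k, replace=False)] = rng.choice([-1.0, 1.0], size=k)
y = X @ beta_true + 0.01 * rng.normal(size=n)
beta_hat = amp_sparse_regression(X, y)
print(np.linalg.norm(beta_hat - beta_true) / np.linalg.norm(beta_true))
```

Each iteration costs two matrix-vector products, which is the property that makes AMP-style methods attractive at biobank scale.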

17 Views

- Read more about EFFICIENT VIDEO AND AUDIO PROCESSING WITH LOIHI 2

Loihi 2 is a fully event-based neuromorphic processor that supports a wide range of synaptic connectivity configurations and temporal neuron dynamics. Loihi 2's temporal and event-based paradigm is naturally well-suited to processing data from an event-based sensor, such as a Dynamic Vision Sensor (DVS) or a Silicon Cochlea. However, this raises the question: how general are the signal processing efficiency gains on Loihi 2 relative to conventional computer architectures?
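The efficiency argument for event-based processing rests on sparsity: work is done only when the input changes. The sketch below is a generic send-on-change (delta) encoder in NumPy that illustrates this for a slowly varying signal; it does not use Intel's Lava framework or any Loihi 2 API, and the threshold value is an arbitrary assumption. Reconstruction error stays below the threshold while far fewer events than samples are emitted.

```python
import numpy as np

def delta_encode(signal, threshold):
    """Emit an event (index, change) only when the signal has moved by at least
    `threshold` since the last transmitted value."""
    events, last = [], 0.0
    for i, x in enumerate(signal):
        if abs(x - last) >= threshold:
            events.append((i, x - last))
            last = x
    return events

def delta_decode(events, length):
    """Rebuild a piecewise-constant signal by accumulating event payloads."""
    out, level, j = np.zeros(length), 0.0, 0
    for i in range(length):
        while j < len(events) and events[j][0] == i:
            level += events[j][1]
            j += 1
        out[i] = level
    return out

t = np.linspace(0, 1, 1000)
signal = np.sin(2 * np.pi * 2 * t)      # slowly varying input
events = delta_encode(signal, threshold=0.05)
recon = delta_decode(events, len(signal))
print(len(events), np.max(np.abs(recon - signal)))
```

For dense, rapidly changing inputs nearly every sample would trigger an event, which is why the efficiency gains are not automatically general.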

107 Views