- Read more about Generic Bounds on the Maximum Deviations in Sequential/Sequence Prediction (and the Implications in Recursive Algorithms and Learning/Generalization)

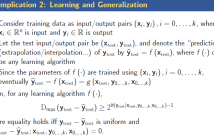

In this paper, we derive generic bounds on the maximum deviations in prediction errors for sequential prediction via an information-theoretic approach. The fundamental bounds are shown to depend only on the conditional entropy of the data point to be predicted given the previous data points. In the asymptotic case, the bounds are achieved if and only if the prediction error is white and uniformly distributed.
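The dependence on entropy can be illustrated with a standard maximum-entropy argument, offered here as a sketch and not as the paper's exact statement. A prediction error confined to [-D, D] has differential entropy h of at most log2(2D) bits, with equality only for the uniform distribution, so D >= 2^h / 2. The function names below are hypothetical.

```python
import math

def max_deviation_lower_bound(entropy_bits):
    """Smallest half-width D of a support [-D, D] compatible with
    differential entropy h (in bits): D >= 2**h / 2."""
    return 2.0 ** entropy_bits / 2.0

def uniform_entropy_bits(half_width):
    # Uniform density on [-D, D]: h = log2(2 D) bits.
    return math.log2(2.0 * half_width)

def triangular_entropy_bits(half_width):
    # Symmetric triangular density on [-D, D]: h = 1/2 + ln(D) nats.
    return (0.5 + math.log(half_width)) / math.log(2.0)

D = 3.0
# A uniform error meets the bound with equality (up to rounding).
uniform_bound = max_deviation_lower_bound(uniform_entropy_bits(D))
# A triangular error on the same support leaves a gap: the bound is below D.
triangular_bound = max_deviation_lower_bound(triangular_entropy_bits(D))
print(uniform_bound, triangular_bound)
```

Comparing the two values shows why a non-uniform error cannot achieve the bound: its entropy is lower than that of the uniform density on the same support.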

54 Views

- Read more about Minimax Active Learning via Minimal Model Capacity

Active learning is a form of machine learning that combines supervised learning and feedback to minimize the training set size while keeping the generalization error low. Since direct optimization of the generalization error is difficult, many heuristics that lack a firm theoretical foundation have been developed. In this paper, a new information-theoretic criterion is proposed based on a minimax log-loss regret formulation of the active learning problem. In the first part of this paper, a Redundancy-Capacity theorem for active learning is derived along with an optimal learner.
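For orientation, the classical (passive) redundancy-capacity theorem states that the minimax log-loss redundancy over a model class equals the capacity of the "channel" from model index to observation. The sketch below computes that capacity with the Blahut-Arimoto iteration for a small finite class. It does not reproduce the paper's active-learning variant, and the function names and the two-model example are assumptions made for illustration.

```python
import math

def kl_bits(p, q):
    """Kullback-Leibler divergence D(p || q) in bits."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

def mixture_of(prior, models):
    """Mixture distribution sum_theta prior[theta] * P(x | theta)."""
    return [sum(w * m[x] for w, m in zip(prior, models))
            for x in range(len(models[0]))]

def minimax_redundancy_bits(models, iterations=500):
    """Blahut-Arimoto iteration: returns (capacity in bits, capacity-achieving prior)."""
    prior = [1.0 / len(models)] * len(models)
    for _ in range(iterations):
        mixture = mixture_of(prior, models)
        # Reweight each model by how far it is from the current mixture.
        weights = [w * 2.0 ** kl_bits(m, mixture) for w, m in zip(prior, models)]
        total = sum(weights)
        prior = [w / total for w in weights]
    mixture = mixture_of(prior, models)
    capacity = sum(w * kl_bits(m, mixture) for w, m in zip(prior, models))
    return capacity, prior

# Hypothetical class: two models over a binary observation (a binary symmetric channel).
models = [[0.9, 0.1], [0.1, 0.9]]
capacity, prior = minimax_redundancy_bits(models)
print(capacity, prior)
```

The mixture under the returned prior plays the role of the minimax-optimal predictor in the classical setting.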

98 Views

- Read more about ONLINE ANOMALY DETECTION IN MULTIVARIATE SETTINGS

29 Views

- Read more about Variational and Hierarchical Recurrent Autoencoder

Despite great success in learning representations for image data, it remains challenging to learn stochastic latent features from natural language using variational inference. The difficulty in stochastic sequential learning stems from posterior collapse: an autoregressive decoder tends to be too strong, so insufficient latent information is learned during optimization. To compensate for this weakness in the learning procedure, a sophisticated latent structure is required to ensure good convergence so that random features are sufficiently captured for sequential decoding.
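Posterior collapse is commonly diagnosed through the KL term of the variational objective. The sketch below assumes a diagonal Gaussian approximate posterior and a standard normal prior, which is a common choice but is not confirmed by the abstract. The function name and the example values are hypothetical.

```python
import math

def gaussian_kl_to_standard_normal(means, log_variances):
    """KL( N(mu, diag(sigma^2)) || N(0, I) ) in nats, summed over latent dimensions."""
    return 0.5 * sum(
        mu * mu + math.exp(log_var) - 1.0 - log_var
        for mu, log_var in zip(means, log_variances)
    )

# Collapsed posterior: identical to the prior, so the latent code carries no information.
collapsed = gaussian_kl_to_standard_normal([0.0, 0.0], [0.0, 0.0])
# Informative posterior: shifted and rescaled relative to the prior.
informative = gaussian_kl_to_standard_normal([1.0, -2.0], [-1.0, 0.5])
print(collapsed, informative)
```

A KL term that stays near zero throughout training indicates that the decoder is ignoring the latent variable.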

23 Views

- Read more about BAYESIAN QUICKEST CHANGE POINT DETECTION WITH MULTIPLE CANDIDATES OF POST-CHANGE MODELS

We study quickest change point detection for systems with multiple possible post-change models. A change point is the time instant at which the distribution of a random process changes. We consider the case in which the post-change model belongs to a finite set of possible models. In the Bayesian setting, the objective is to minimize the average detection delay (ADD), subject to upper bounds on the probability of false alarm (PFA). The proposed algorithm is a threshold-based sequential test.
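A threshold-based Bayesian test of this kind can be sketched as a Shiryaev-style posterior recursion extended to several post-change candidates. This is an illustration under stated assumptions, not the paper's algorithm: the change time has a geometric prior with parameter `rho`, observations are unit-variance Gaussians, and all names and numbers are hypothetical. Stopping once the posterior probability of a change reaches `1 - alpha` keeps the PFA at or below `alpha`.

```python
import math

def gaussian_pdf(x, mean, std=1.0):
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def detect_change(samples, pre_mean, post_means, weights, rho, alpha):
    """Return (stopping time, index of most likely post-change model), or None.

    post[m] tracks P(change has occurred and model m is active | data so far).
    """
    post = [0.0] * len(post_means)
    for n, x in enumerate(samples, start=1):
        no_change = max(0.0, 1.0 - sum(post))
        # Prediction step: a change may occur now with probability rho,
        # landing on model m with prior weight weights[m].
        pred = [p + no_change * rho * w for p, w in zip(post, weights)]
        pred_no_change = no_change * (1.0 - rho)
        # Update step: reweight every hypothesis by the likelihood of x.
        joint = [p * gaussian_pdf(x, m) for p, m in zip(pred, post_means)]
        joint_no_change = pred_no_change * gaussian_pdf(x, pre_mean)
        total = joint_no_change + sum(joint)
        post = [j / total for j in joint]
        # Threshold test on the posterior probability that a change has occurred.
        if sum(post) >= 1.0 - alpha:
            best = max(range(len(post)), key=post.__getitem__)
            return n, best
    return None

# Hypothetical data: 20 pre-change samples at 0.0, then the mean jumps to 3.0.
samples = [0.0] * 20 + [3.0] * 10
result = detect_change(samples, pre_mean=0.0, post_means=[-3.0, 3.0],
                       weights=[0.5, 0.5], rho=0.05, alpha=0.01)
print(result)
```

Lowering `alpha` raises the threshold, which trades a smaller PFA for a longer detection delay.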

20 Views

- Read more about Topological Interference Alignment via Generalized Low-Rank Optimization with Sequential Convex Approximations

8 Views

- Read more about Topological Interference Alignment via Generalized Low-Rank Optimization with Sequential Convex Approximations

5 Views

- Read more about SEQUENTIAL MAXIMUM MARGIN CLASSIFIERS FOR PARTIALLY LABELED DATA

poster.pdf

8 Views

We introduce a sequential Bayesian binary hypothesis testing problem under social learning, termed selfish learning, where agents work to maximize their individual rewards. In particular, each agent receives a private signal and is aware of decisions made by earlier-acting agents. Besides inferring the underlying hypothesis, agents also decide whether to stop and declare, or pass the inference to the next agent. The employer rewards only correct responses, and the reward per worker decreases with the number of employees used for decision making.

15 Views

8 Views