High-dimensional embedding denoising autoencoding prior for color image restoration

This work exploits the basic denoising autoencoder (DAE) as an enhanced prior for color image restoration (IR). The proposed method consists of two steps: learning an enhanced DAE network and performing iterative restoration. Specifically, in the training phase, a denoising network that takes a 6-dimensional variable as input is trained. Then, the DAE prior, embedded with the network-driven high-dimensional prior information, is used in the iterative restoration procedure. We first map the intermediate color image to six dimensions and then apply the higher-dimensional network to its corrupted version.
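The iterative restoration step can be illustrated with a minimal NumPy sketch. Several pieces are assumptions and do not come from the abstract:

- the 3-to-6 channel mapping (`to_6d`, which here simply stacks the image with itself);
- the `box_denoiser` stand-in for the trained 6-channel DAE;
- the identity degradation;
- the mean-shift-style prior gradient `x - D(x + noise)`.

It is not the authors' implementation.

```python
import numpy as np

def to_6d(img):
    # ASSUMPTION: lift an H x W x 3 image to H x W x 6 by stacking it with itself
    return np.concatenate([img, img], axis=-1)

def from_6d(vol):
    # Average the two 3-channel halves back into an H x W x 3 image
    return 0.5 * (vol[..., :3] + vol[..., 3:])

def box_denoiser(vol):
    # HYPOTHETICAL stand-in for the trained 6-channel DAE: 3 x 3 mean filter per channel
    padded = np.pad(vol, ((1, 1), (1, 1), (0, 0)), mode="edge")
    h, w = vol.shape[:2]
    out = np.zeros_like(vol, dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + h, dx:dx + w, :]
    return out / 9.0

def dae_prior_restore(y, denoiser, sigma_eta=0.05, step=0.5, lam=1.0, iters=20, seed=0):
    rng = np.random.default_rng(seed)
    x = np.asarray(y, dtype=float).copy()
    for _ in range(iters):
        v = to_6d(x)                                           # map the intermediate image to 6 channels
        noisy = v + sigma_eta * rng.standard_normal(v.shape)   # corrupted version fed to the network
        prior_grad = x - from_6d(denoiser(noisy))              # ASSUMPTION: mean-shift-style prior gradient
        data_grad = x - y                                      # gradient of 0.5*||x - y||^2 (identity degradation)
        x = x - step * (data_grad + lam * prior_grad)
    return x
```

With `step=0.5` and `lam=1.0` each update reduces to `0.5 * y + 0.5 * D(x + noise)`, which keeps the toy iteration stable. A real degradation operator (blur, mosaicking) would replace `data_grad`.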

62 Views

High-Resolution Class Activation Mapping

The lack of explanations for their predictions has long been a major drawback of neural networks and has proved to be a significant obstacle to their adoption in several fields of application. This paper presents a framework for discriminative localization, which helps shed some light on the decision-making of Convolutional Neural Networks (CNNs). Our framework generates robust, refined and high-quality Class Activation Maps (CAMs) without impacting the CNN's performance.
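The abstract does not say how the high-resolution maps are produced. The sketch below therefore only shows the standard CAM computation that such frameworks refine:

1. Take a weighted sum of the last convolutional feature maps, using the classifier weights of one class.
2. Normalize the result to [0, 1].
3. Upsample it to the input size.

The nearest-neighbour upsampling and the integer-multiple output size are simplifying assumptions.

```python
import numpy as np

def class_activation_map(features, fc_weights, class_idx, out_size):
    # features: (K, h, w) last conv feature maps; fc_weights: (C, K) weights after global average pooling
    cam = np.tensordot(fc_weights[class_idx], features, axes=([0], [0]))  # (h, w) weighted sum
    cam = cam - cam.min()
    if cam.max() > 0:
        cam = cam / cam.max()  # normalize to [0, 1]
    # ASSUMPTION: nearest-neighbour upsampling; out_size must be an integer multiple of (h, w)
    H, W = out_size
    fy, fx = H // cam.shape[0], W // cam.shape[1]
    return np.repeat(np.repeat(cam, fy, axis=0), fx, axis=1)
```

The coarse spatial grid of `features` is what limits CAM resolution. Producing sharper maps than this naive upsampling is the problem a high-resolution framework addresses.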

136 Views

INFORMATIVE FRAME CLASSIFICATION OF ENDOSCOPIC VIDEOS USING CONVOLUTIONAL NEURAL NETWORKS AND HIDDEN MARKOV MODELS

The goal of endoscopic analysis is to find abnormal lesions and to determine further therapy from the obtained information. However, the procedure produces a variety of non-informative frames, and lesions can be missed due to poor video quality. Especially when analyzing entire endoscopic videos recorded by non-expert endoscopists, informative frame classification is crucial for tasks such as video quality grading. This work concentrates on the design of an automated method for indicating the informativeness of video frames.
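The title pairs a CNN with a hidden Markov model, but the abstract does not say how they are combined. One common pattern, assumed here, works as follows:

- The CNN produces a per-frame probability that the frame is informative.
- A two-state HMM (0 = non-informative, 1 = informative) smooths these predictions over time with the Viterbi algorithm.
- The "sticky" transition probability `p_stay` is a hypothetical parameter.

```python
import numpy as np

def viterbi_smooth(p_informative, p_stay=0.9):
    # ASSUMPTION: CNN outputs are used directly as emission likelihoods of a 2-state HMM
    p = np.clip(np.asarray(p_informative, dtype=float), 1e-6, 1 - 1e-6)
    log_emit = np.log(np.stack([1 - p, p], axis=1))            # (T, 2): state 0 or 1 per frame
    log_trans = np.log(np.array([[p_stay, 1 - p_stay],
                                 [1 - p_stay, p_stay]]))     # sticky transitions between states
    T = len(p)
    score = np.log(0.5) + log_emit[0]                          # uniform initial state distribution
    back = np.zeros((T, 2), dtype=int)
    for t in range(1, T):
        cand = score[:, None] + log_trans                      # cand[i, j]: best path ending i -> j
        back[t] = np.argmax(cand, axis=0)
        score = cand.max(axis=0) + log_emit[t]
    states = np.zeros(T, dtype=int)
    states[-1] = int(np.argmax(score))
    for t in range(T - 1, 0, -1):                              # backtrack the most likely sequence
        states[t - 1] = back[t, states[t]]
    return states
```

Consider the probabilities `[0.9, 0.9, 0.4, 0.9, 0.9]`. Thresholding at 0.5 would flag the middle frame as non-informative. The HMM keeps the whole run labeled informative, because a brief switch costs two unlikely transitions.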

64 Views

ARCHITECTURE-AWARE NETWORK PRUNING FOR VISION QUALITY APPLICATIONS

Convolutional neural networks (CNNs) have delivered impressive achievements in computer vision and machine learning. However, CNNs incur high computational complexity, especially in vision quality applications, because of large image resolutions. In this paper, we propose an iterative, architecture-aware pruning algorithm with an adaptive magnitude threshold that operates together with quality-metric measurement. We show the performance improvement on vision quality applications and provide a comprehensive analysis with flexible pruning configurations.
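The following sketch shows iterative magnitude pruning gated by a quality metric. It is a minimal version under stated assumptions, not the paper's architecture-aware algorithm:

- The per-layer adaptive threshold is taken as the magnitude quantile that matches the current target sparsity.
- `quality_fn` is a hypothetical callable returning a quality score such as PSNR in dB.
- Pruning stops once quality drops by more than `max_drop`.

```python
import numpy as np

def iterative_magnitude_prune(weights, quality_fn, max_drop=0.5, step=0.05, max_sparsity=0.95):
    # weights: dict layer_name -> np.ndarray
    # quality_fn: HYPOTHETICAL callable(dict) -> float, higher is better (e.g. PSNR)
    baseline = quality_fn(weights)
    best = {k: w.copy() for k, w in weights.items()}
    sparsity = step
    while sparsity <= max_sparsity + 1e-9:
        candidate = {}
        for name, w in weights.items():
            # ASSUMPTION: adaptive per-layer threshold = magnitude quantile at the target sparsity
            thr = np.quantile(np.abs(w), sparsity)
            candidate[name] = np.where(np.abs(w) > thr, w, 0.0)
        if baseline - quality_fn(candidate) > max_drop:
            break  # quality fell too far: keep the previous, less sparse model
        best = candidate
        sparsity += step
    return best
```

An architecture-aware variant would set `step` or the threshold differently per layer type. This sketch treats all layers uniformly.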

82 Views

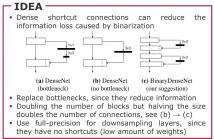

Binary neural networks are a promising approach to executing convolutional neural networks on devices with low computational power. Previous work on this subject often quantizes pretrained full-precision models and uses complex training strategies. In our work, we focus on increasing the performance of binary neural networks by training from scratch with a simple training strategy. In our experiments, we show that we are able to achieve state-of-the-art results on standard benchmark datasets.
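Training a binary network from scratch usually relies on three building blocks, shown here in a minimal sketch:

- a sign function that maps values to {-1, +1};
- a straight-through estimator (STE), which passes gradients through the non-differentiable sign where |x| <= 1;
- an XNOR-popcount dot product, which is what makes inference cheap on low-power hardware.

These are the standard textbook forms, assumed here; the abstract does not describe the authors' exact training strategy.

```python
import numpy as np

def binarize(x):
    # Sign with the convention sign(0) = +1, so every value maps to {-1, +1}
    x = np.asarray(x, dtype=float)
    return np.where(x >= 0, 1.0, -1.0)

def ste_grad(x, upstream):
    # Straight-through estimator: pass the upstream gradient where |x| <= 1, zero elsewhere
    x = np.asarray(x, dtype=float)
    return np.asarray(upstream, dtype=float) * (np.abs(x) <= 1.0)

def xnor_popcount_dot(a_bits, b_bits):
    # a_bits, b_bits: boolean arrays where True encodes +1 and False encodes -1
    n = a_bits.size
    matches = np.count_nonzero(~(a_bits ^ b_bits))  # XNOR, then count the ones (popcount)
    return 2 * matches - n                          # equals the dot product of the +/-1 vectors
```

The identity `dot = 2 * matches - n` holds because each matching position contributes +1 and each mismatch contributes -1.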

poster.pdf

61 Views

This document includes the slides of the ICIP2019 presentation of the publication "DVDnet: A Fast Network for Deep Video Denoising".

148 Views

EFFICIENT FINE-TUNING OF NEURAL NETWORKS FOR ARTIFACT REMOVAL IN DEEP LEARNING FOR INVERSE IMAGING PROBLEMS

While Deep Neural Networks trained to solve inverse imaging problems (such as super-resolution, denoising, or inpainting) regularly achieve new state-of-the-art restoration performance, this increase in performance is often accompanied by undesired artifacts in their solutions. These artifacts are usually specific to the type of neural network architecture, the training, or the test input image used for the inverse imaging problem at hand. In this paper, we propose a fast, efficient post-processing method for reducing these artifacts.

30 Views

Speech Emotion Recognition Using Multi-hop Attention Mechanism

In this paper, we are interested in exploiting the textual and acoustic data of an utterance for the speech emotion classification task. The baseline approach models the information from audio and text independently using two deep neural networks (DNNs). The outputs of both DNNs are then fused for classification. Instead of using knowledge from the two modalities separately, we propose a framework that exploits acoustic information in tandem with lexical data.
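A minimal NumPy sketch illustrates multi-hop attention that alternates between modalities, so that acoustic and lexical information are used in tandem rather than fused only at the output. The hop order, mean-pooled initial summaries, dot-product scoring and shared feature dimension `d` are all assumptions; the abstract does not specify the authors' architecture.

```python
import numpy as np

def softmax(z):
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def attend(query, keys):
    # Dot-product attention: weight each row of keys by its similarity to the query
    weights = softmax(keys @ query)
    return weights @ keys, weights

def multi_hop_fusion(audio_seq, text_seq, hops=2):
    # audio_seq: (Ta, d) frame features; text_seq: (Tt, d) token features
    # ASSUMPTION: both modalities share dimension d; initial summaries are mean-pooled
    a = audio_seq.mean(axis=0)
    t = text_seq.mean(axis=0)
    for hop in range(hops):
        if hop % 2 == 0:
            t, _ = attend(a, text_seq)   # audio summary queries the text tokens
        else:
            a, _ = attend(t, audio_seq)  # refined text summary queries the audio frames
    return np.concatenate([a, t])        # fused vector for a downstream emotion classifier
```

Each hop lets one modality decide which parts of the other to focus on. This is the main difference from the baseline, which fuses two independently computed outputs.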

266 Views

Aggregation Graph Neural Networks

Graph neural networks (GNNs) regularize classical neural networks by exploiting the underlying irregular structure supporting graph data, extending their application to broader data domains. The aggregation GNN presented here is a novel GNN that exploits the fact that the data collected at a single node by means of successive local exchanges with neighbors exhibits a regular structure. Thus, regular convolution and regular pooling can be applied to this data, yielding an appropriately regularized GNN.
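The aggregation idea can be sketched in a few lines. Repeatedly applying a graph shift operator `S` (here, the adjacency matrix) corresponds to successive exchanges with one-hop neighbours. Recording the value seen at one node after each exchange gives a regular, time-like sequence, and an ordinary 1-D convolution can run over that sequence. The choice of `S`, the sequence length `K` and the filter taps are illustrative assumptions.

```python
import numpy as np

def aggregation_sequence(S, x, node, K):
    # Collect [x]_node, [Sx]_node, ..., [S^(K-1) x]_node via successive local exchanges
    z = np.empty(K)
    v = np.asarray(x, dtype=float)
    for k in range(K):
        z[k] = v[node]
        v = S @ v  # one more exchange with one-hop neighbours
    return z

def regular_conv1d(z, h):
    # Ordinary 'valid' 1-D convolution over the regular aggregation index
    return np.convolve(z, h, mode="valid")
```

On a 3-node path graph with a signal at node 0, the value only reaches node 2 after two exchanges. The aggregation sequence at node 2 is therefore `[0, 0, 1]`, and a regular filter can be applied to it exactly as it would be to a time series.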

43 Views