ICASSP is the world’s largest and most comprehensive technical conference focused on signal processing and its applications. The 2019 conference will feature world-class presentations by internationally renowned speakers and cutting-edge session topics, and will provide an opportunity to network with like-minded professionals from around the world.

- Read more about Cyborg Speech: Deep Multilingual Speech Synthesis for Generating Segmental Foreign Accent with Natural Prosody

We describe a new application of deep-learning-based speech synthesis, namely multilingual speech synthesis for generating controllable foreign accent. Specifically, we train a DBLSTM-based acoustic model on non-accented multilingual speech recordings from a speaker who is native in several languages. By copying durations and pitch contours from a pre-recorded utterance of the desired prompt, natural prosody is achieved. We call this paradigm "cyborg speech" as it combines human and machine speech parameters.

39 Views

- Read more about Invisible Geo-Location Signature in a Single Image

Geo-tagging images of interest is increasingly important to law enforcement, national security, and journalism. Many images today do not carry location tags that are trustworthy and resilient to tampering, and landmark-based visual clues may not be readily present in every image, especially in those taken indoors. In this paper, we exploit an invisible signature from the power grid, the Electric Network Frequency (ENF) signal, which can be inherently recorded in a sensing stream at the time of capture and carries useful location information.

54 Views

- Read more about LEARNED FORENSIC SOURCE SIMILARITY FOR UNKNOWN CAMERA MODELS

Information about an image's source camera model is important in many forensic investigations. In this paper, we propose a system that compares two image patches to determine whether they were captured by the same camera model. To do this, we first train a CNN-based feature extractor to output generic, high-level features that encode information about the source camera model of an image patch. Then, we learn a similarity measure that maps pairs of these features to a score indicating whether the two image patches were captured by the same or different camera models.
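The second stage described above, a measure that maps a pair of feature vectors to a same-or-different score, can be illustrated with a minimal sketch. The abstract does not specify the form of the similarity measure, so the logistic layer below, the symmetric pair encoding, and the names `similarity_score`, `w`, and `b` are all assumptions made for illustration; in practice the weights would be learned from labeled patch pairs.

```python
import numpy as np

def similarity_score(f1, f2, w, b):
    """Map a pair of feature vectors to a score in (0, 1).

    Hypothetical stand-in for a learned similarity measure: a single
    logistic layer applied to a symmetric encoding of the pair.
    """
    f1 = np.asarray(f1, dtype=float)
    f2 = np.asarray(f2, dtype=float)
    # Symmetric encoding, so that score(f1, f2) == score(f2, f1).
    pair = np.concatenate([np.abs(f1 - f2), f1 * f2])
    # Logistic output: values near 1 would mean "same camera model".
    return 1.0 / (1.0 + np.exp(-(pair @ w + b)))
```

With features of dimension `d`, the weight vector `w` has length `2 * d`. The symmetric encoding is a design choice of this sketch: it guarantees that the order of the two patches does not change the score.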

62 Views

- Read more about Effective Noise Removal and Unified Model of Hybrid Feature Space Optimization for Automated Cardiac Anomaly Detection using Phonocardiogram Signals

In this paper, we present a completely automated cardiac anomaly detection method for remote screening of cardiovascular abnormalities using phonocardiogram (PCG), or heart sound, signals. Although PCG contains vital cardiac health information and signatures of cardiac abnormality, substantial noise can prevent effective analysis of the cardiac condition. Our proposed method identifies and eliminates noisy PCG signals and then detects pathological abnormalities. We further present a unified model of hybrid feature selection.

32 Views

- Read more about ACCELERATING RECURRENT NEURAL NETWORK LANGUAGE MODEL BASED ONLINE SPEECH RECOGNITION SYSTEM

This paper presents methods to accelerate recurrent neural network based language models (RNNLMs) for online speech recognition systems.

Firstly, a lossy compression of the past hidden layer outputs (history vector) with caching is introduced in order to reduce the number of LM queries.

Next, RNNLM computations are deployed in a CPU-GPU hybrid manner, which computes each layer of the model on a more advantageous platform.

The overhead added by data exchanges between CPU and GPU is offset by a frame-wise batching strategy.
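The first idea above, caching LM results under a lossily compressed history vector, can be sketched as follows. This is an illustration only: the abstract does not say how the history vector is compressed, so uniform rounding with a fixed `step`, the class name `QuantizedHistoryCache`, and the callable `lm_fn` are all assumptions.

```python
import numpy as np

class QuantizedHistoryCache:
    """Cache LM outputs keyed by a coarsely quantized history vector."""

    def __init__(self, lm_fn, step=0.25):
        self.lm_fn = lm_fn   # hypothetical callable: history vector -> LM scores
        self.step = step     # quantization step (assumed value)
        self.cache = {}
        self.queries = 0     # number of actual LM evaluations

    def _key(self, history):
        # Lossy compression: round each component to a multiple of `step`,
        # so nearby history vectors collapse onto the same cache key.
        q = np.round(np.asarray(history, dtype=float) / self.step)
        return q.astype(np.int32).tobytes()

    def scores(self, history):
        key = self._key(history)
        if key not in self.cache:
            self.queries += 1
            self.cache[key] = self.lm_fn(history)
        return self.cache[key]
```

Two history vectors that round to the same grid point share one LM evaluation, which is how the number of LM queries falls. A larger `step` gives more cache hits at the cost of a coarser approximation.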

13 Views

- Read more about On the Comparison of Two Room Compensation / Dereverberation Methods Employing Active Acoustic Boundary Absorption

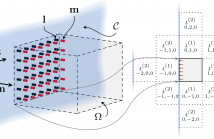

In this paper, we compare the performance of two active dereverberation techniques using a planar array of microphones and loudspeakers. The two techniques are based on a solution to the Kirchhoff-Helmholtz Integral Equation (KHIE). We adapt a Wave Field Synthesis (WFS) based method to the application of real-time 3D dereverberation by using a low-latency pre-filter design. We also propose the use of First-Order Differential (FOD) models as an alternative to the use of monopoles with WFS; this alternative does not assume knowledge of the room geometry or primary sources.

23 Views

Clustering is widely used for exploratory data analysis in a variety of applications. Traditionally, clustering is studied as an unsupervised task in which no human input is provided. A recent trend in clustering is to leverage user-provided side information to better infer the clustering structure in data. In this paper, we propose a probabilistic graphical model that allows the user to provide the desired cluster sizes, namely the cardinality constraints, as input. Our model also incorporates a flexible mechanism to control the crispness of the clusters.

24 Views

- Read more about JOINT BAYESIAN ESTIMATION OF TIME-VARYING LP PARAMETERS AND EXCITATION FOR SPEECH

We consider the joint estimation of time-varying linear prediction (TVLP) filter coefficients and the excitation signal parameters for the analysis of long-term speech segments. Traditional approaches to TVLP estimation assume only a linear expansion of the coefficients in a set of known basis functions. However, the excitation signal is also time-varying, which affects the estimation of the TVLP filter parameters. In this paper, we propose a Bayesian approach that incorporates the nature of the excitation signal and also adapts the regularization of the filter parameters.
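The traditional baseline mentioned above, expanding each filter coefficient in a set of known basis functions, can be sketched as an ordinary least-squares problem. This is not the Bayesian method the abstract proposes. The sketch assumes the convention s[n] = sum_k a_k[n] s[n-k] + e[n] with a_k[n] = sum_i c[k, i] f_i[n], and it assumes a polynomial basis on [-1, 1]. The function name `fit_tvlp` and its parameters are hypothetical.

```python
import numpy as np

def fit_tvlp(s, order, n_basis):
    """Least-squares TVLP fit with a polynomial basis (assumed choice).

    Returns the expansion weights C (order x n_basis) and the coefficient
    trajectories a (order x N), where a[k-1, n] is a_k[n].
    """
    s = np.asarray(s, dtype=float)
    N = len(s)
    n = np.arange(order, N)                 # samples with a full history
    t = np.linspace(-1.0, 1.0, N)           # normalized time axis
    basis = np.stack([t**i for i in range(n_basis)], axis=1)  # (N, n_basis)
    # The regressor for the weight c[k, i] is f_i[n] * s[n - k].
    cols = [basis[n, i] * s[n - k]
            for k in range(1, order + 1)
            for i in range(n_basis)]
    X = np.stack(cols, axis=1)
    c, *_ = np.linalg.lstsq(X, s[n], rcond=None)
    C = c.reshape(order, n_basis)
    a = C @ basis.T                         # (order, N)
    return C, a
```

Because the excitation e[n] is treated here as an unstructured residual, the sketch shows the limitation the abstract points to: any time variation in the excitation is absorbed into the coefficient estimates.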

30 Views

- Read more about Multiple-input neural network-based residual echo suppression

A residual echo suppressor (RES) aims to suppress the residual echo in the output of an acoustic echo canceler (AEC). Spectral-based RES approaches typically estimate the magnitude spectra of the near-end speech and the residual echo from a single input, either the far-end speech or the echo computed by the AEC, and derive the RES filter coefficients accordingly. A single input of this kind does not always suffice to discriminate the near-end speech from the remaining echo.

34 Views

- Read more about Deep learning for predicting image memorability

Memorability of media content such as images and videos has recently become an important research subject in computer vision. This paper presents our computational model for predicting image memorability, which is based on a deep learning architecture designed for a classification task. We use both convolutional neural network (CNN)-based visual features and semantic features related to image captioning for the task. We train and test our model on LaMem, a large-scale memorability benchmark dataset.

128 Views