ICASSP is the world’s largest and most comprehensive technical conference focused on signal processing and its applications. The 2019 conference will feature world-class presentations by internationally renowned speakers and cutting-edge session topics, and will provide a fantastic opportunity to network with like-minded professionals from around the world.

- Read more about On the Role of the Bounded Lemma in the SDP Formulation of Atomic Norm Problems

85 Views

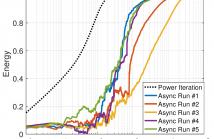

- Read more about THE ASYNCHRONOUS POWER ITERATION: A GRAPH SIGNAL PERSPECTIVE

68 Views

- Read more about Shift-Invariant Kernel Additive Modelling for Audio Source Separation

A major goal in blind source separation is to model the inherent characteristics of the sources so that they can be identified and separated. While most state-of-the-art approaches are supervised methods trained on large datasets, interest in non-data-driven approaches such as Kernel Additive Modelling (KAM) remains high due to their interpretability and adaptability. KAM separates a given source by applying robust statistics to the time-frequency bins selected by a source-specific kernel function, commonly the k-NN function.
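The kernel-plus-robust-statistic idea can be illustrated with a minimal sketch. It rests on assumptions that are not from the paper: the magnitude spectrogram is a list of frames, the k-NN kernel selects whole frames by Euclidean distance instead of individual bins, and the robust statistic is the median. The function names `knn_frames` and `kam_estimate` are hypothetical.

```python
from statistics import median


def knn_frames(spec, t, k):
    """Return indices of the k frames closest to frame t (frame t included)."""
    def dist(u):
        # Squared Euclidean distance between frame t and frame u.
        return sum((a - b) ** 2 for a, b in zip(spec[t], spec[u]))
    return sorted(range(len(spec)), key=dist)[:k]


def kam_estimate(spec, k):
    """Estimate a source as the per-bin median over each frame's k nearest frames.

    spec: list of frames, each a list of non-negative magnitudes.
    This is an illustrative simplification, not the authors' algorithm.
    """
    estimate = []
    for t in range(len(spec)):
        neighbours = knn_frames(spec, t, k)
        frame = [median(spec[u][f] for u in neighbours)
                 for f in range(len(spec[t]))]
        estimate.append(frame)
    return estimate


# Toy spectrogram: a repeating pattern with one outlier bin in frame 2.
spec = [[1.0, 2.0], [1.0, 2.0], [1.0, 9.0], [1.0, 2.0]]
estimate = kam_estimate(spec, k=3)
```

In this toy input the median over the selected frames suppresses the outlier, which is the sense in which the statistic is robust: content that does not recur across the kernel's neighbourhood is attributed to other sources.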

dfy_poster.pdf

8 Views

Project-based learning is a form of active learning where large-scale projects provide context for technical learning. Along with background information, this paper examines the teaching and learning of signals and systems in the context of two ABET-accredited project-based learning programs. Examples of projects, deep learning activities and classroom activities are provided.

218 Views

- Read more about AUGMENTED DATA AND IMPROVED NOISE RESIDUAL-BASED CNN FOR PRINTER SOURCE IDENTIFICATION

29 Views

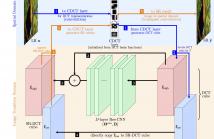

- Read more about RATE-DISTORTION OPTIMIZED ILLUMINATION ESTIMATION FOR WAVELET-BASED VIDEO CODING

We propose a rate-distortion optimized framework for estimating illumination changes (lighting variations, fade in/out effects) in a highly scalable coding system. Illumination variations are realized using multiplicative factors in the image domain and are estimated considering the coding cost of the illumination field and input frames which are first subject to a temporal Lifting-based Illumination Adaptive Transform (LIAT). The coding cost is modelled by an L1-norm optimization problem which is derived to approximate

7 Views

- Read more about ORTHOGONALLY REGULARIZED DEEP NETWORKS FOR IMAGE SUPER-RESOLUTION

10 Views

- Read more about Whole Sentence Neural Language Model

Recurrent neural networks have become increasingly popular for language modeling, achieving impressive gains in state-of-the-art speech recognition and natural language processing (NLP) tasks. Recurrent models exploit word dependencies over a much longer context window (as retained by the history states) than is feasible with n-gram language models.
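The difference in context window can be made concrete with a small sketch. The helper names `ngram_context` and `recurrent_context` are hypothetical, and the recurrent case is idealized: a real network keeps a lossy summary of the prefix in its history state, not the words themselves.

```python
def ngram_context(words, i, n):
    """Words an n-gram model conditions on when predicting words[i]:
    at most the n - 1 immediately preceding words."""
    return words[max(0, i - (n - 1)):i]


def recurrent_context(words, i):
    """Words that can influence a recurrent model's prediction of words[i]:
    the whole prefix, summarized in the history state (idealized here)."""
    return words[:i]


words = "the cat that the dogs chased runs".split()
trigram_view = ngram_context(words, 6, 3)
recurrent_view = recurrent_context(words, 6)
```

When predicting the final word, the trigram model sees only the two preceding words, so the subject "cat" that determines the verb form lies outside its window, whereas the recurrent prefix still contains it.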

99 Views