- Read more about ADA-SISE: ADAPTIVE SEMANTIC INPUT SAMPLING FOR EFFICIENT EXPLANATION OF CONVOLUTIONAL NEURAL NETWORKS

Explainable AI (XAI) is an active research area that aims to interpret a neural network’s decisions, ensuring transparency and trust in the task-specified learned models. Recently, perturbation-based model analysis has yielded better interpretations, but back-propagation techniques still prevail because of their computational efficiency. In this work, we combine both approaches into a hybrid visual explanation algorithm and propose an efficient interpretation method for convolutional neural networks.

11 Views

- Read more about Fusion Neural Network for Vehicle Trajectory Prediction in Autonomous Driving

12 Views

- Read more about CHANNEL-WISE MIX-FUSION DEEP NEURAL NETWORKS FOR ZERO-SHOT LEARNING

10 Views

- Read more about Complex-Valued Vs. Real-Valued Neural Networks for Classification Perspectives: An Example on Non-Circular Data

This paper shows the benefits of using a Complex-Valued Neural Network (CVNN) on classification tasks for non-circular complex-valued datasets. Motivated by radar and especially Synthetic Aperture Radar (SAR) applications, we present a statistical analysis of the performance of fully connected feed-forward neural networks in cases where the real and imaginary parts of the data are correlated through the non-circular property.
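
To make "non-circular" concrete, the following is a minimal sketch, not taken from the paper, that estimates the circularity quotient E[z^2] / E[|z|^2] of a complex sample. The function names `sample_complex` and `circularity_quotient`, the Gaussian model, and the unit variances are all assumptions chosen for illustration. Under this model the quotient is close to zero for circular data and has magnitude close to the correlation coefficient when the real and imaginary parts are correlated.

```python
import numpy as np


def sample_complex(n, corr, seed=0):
    """Draw n zero-mean complex samples whose real and imaginary parts
    have unit variance and correlation coefficient `corr` (illustrative model)."""
    rng = np.random.default_rng(seed)
    cov = [[1.0, corr], [corr, 1.0]]
    xy = rng.multivariate_normal([0.0, 0.0], cov, size=n)
    return xy[:, 0] + 1j * xy[:, 1]


def circularity_quotient(z):
    """Ratio of the pseudo-variance E[z^2] to the variance E[|z|^2].
    A value near 0 indicates (second-order) circular data."""
    z = z - z.mean()
    return np.mean(z ** 2) / np.mean(np.abs(z) ** 2)


if __name__ == "__main__":
    for corr in (0.0, 0.8):
        rho = circularity_quotient(sample_complex(20000, corr))
        print(f"corr={corr}: |circularity quotient| = {abs(rho):.3f}")
```

A real-valued network sees the real and imaginary parts as two unrelated inputs, whereas the quotient above summarizes exactly the coupling between them that the abstract refers to.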

42 Views

- Read more about BLEND-RES^2NET: Blended Representation Space by Transformation of Residual Mapping with Restrained Learning For Time Series Classification

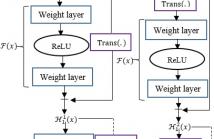

Common problems in time series classification, such as insufficient training instances, demand novel deep neural architectures that deliver consistent and accurate performance. A Deep Residual Network (ResNet) learns through H(x) = F(x) + x, where F(x) is a nonlinear function. We propose Blend-Res2Net, which blends two different representation spaces, H^1(x) = F(x) + Trans(x) and H^2(x) = F(Trans(x)) + x, with the aim of learning a richer representation that captures both temporal and spectral signatures (Trans(∙) denotes the transformation function).
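
The three mappings in the abstract can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: `trans` is a hypothetical stand-in for Trans(∙) (here the magnitude of an orthonormal FFT, chosen because the abstract mentions spectral signatures), and `make_f` builds a toy nonlinear F(∙) with random weights. Neither is the authors' actual choice, and the abstract does not say how the two spaces are combined, so the sketch only computes them.

```python
import numpy as np


def trans(x):
    """Hypothetical Trans(.): FFT magnitude, same length as the input."""
    return np.abs(np.fft.fft(x, norm="ortho"))


def make_f(n, seed=0):
    """Toy nonlinear F(.): one random linear layer followed by tanh."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=1.0 / np.sqrt(n), size=(n, n))

    def f(x):
        return np.tanh(w @ x)

    return f


def resnet_block(f, x):
    """Standard residual mapping: H(x) = F(x) + x."""
    return f(x) + x


def blend_h1(f, x):
    """H^1(x) = F(x) + Trans(x): the skip path carries the transformed input."""
    return f(x) + trans(x)


def blend_h2(f, x):
    """H^2(x) = F(Trans(x)) + x: the residual branch sees the transformed input."""
    return f(trans(x)) + x


if __name__ == "__main__":
    x = np.sin(np.linspace(0.0, 2.0 * np.pi, 16))
    f = make_f(x.size)
    print(resnet_block(f, x).shape, blend_h1(f, x).shape, blend_h2(f, x).shape)
```

The only difference between the two blended forms is where Trans(∙) is applied: on the identity path in H^1 and on the input of the residual branch in H^2.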

20 Views

- Read more about STEP-GAN: A One-Class Anomaly Detection Model with Applications to Power System Security

11 Views

- Read more about DEEP LEARNING BASED HYBRID PRECODING IN DUAL-BAND COMMUNICATION SYSTEMS

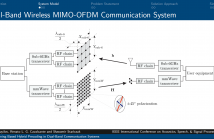

We propose a deep learning-based method that uses spatial and temporal information extracted from the sub-6GHz band to predict/track beams in the millimeter-wave (mmWave) band. In more detail, we consider a dual-band communication system operating in both the sub-6GHz and mmWave bands. The objective is to maximize the achievable mutual information in the mmWave band with a hybrid analog/digital architecture where analog precoders (RF precoders) are taken from a finite codebook.
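
For orientation, the selection problem that a beam predictor targets can be sketched as an exhaustive search over a finite codebook. The sketch below is a simplified baseline and not the proposed deep learning method: it assumes a single-antenna user, a narrowband channel vector `h`, a DFT codebook, and a single analog beam with no digital precoder. The names `dft_codebook` and `best_beam` are hypothetical.

```python
import numpy as np


def dft_codebook(n_ant, n_beams):
    """Finite codebook of unit-norm DFT beams, one beam per column (assumed design)."""
    k = np.arange(n_ant)[:, None]
    freqs = np.arange(n_beams)[None, :] / n_beams
    return np.exp(2j * np.pi * k * freqs) / np.sqrt(n_ant)


def best_beam(h, codebook, snr=1.0):
    """Exhaustively pick the beam maximizing log2(1 + snr * |h^H f|^2)."""
    gains = np.abs(h.conj() @ codebook) ** 2
    rates = np.log2(1.0 + snr * gains)
    idx = int(np.argmax(rates))
    return idx, float(rates[idx])


if __name__ == "__main__":
    codebook = dft_codebook(n_ant=8, n_beams=8)
    # Toy channel perfectly aligned with beam 5 (illustration only).
    h = np.sqrt(8) * codebook[:, 5]
    print(best_beam(h, codebook))
```

In a dual-band system the search above would require mmWave channel knowledge or beam training; predicting the index from sub-6GHz information, as the abstract describes, aims to avoid that overhead.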

11 Views

- Read more about Robust Domain-Free Domain Generalization with Class-Aware Alignment

While deep neural networks demonstrate state-of-the-art performance on a variety of learning tasks, their performance relies on the assumption that train and test distributions are the same, which may not hold in real-world applications. Domain generalization addresses this issue by employing multiple source domains to build robust models that can generalize to unseen target domains subject to shifts in data distribution.

DFDG_slides.pdf

DFDG_poster.pdf

30 Views

- Read more about LAYER-WISE INTERPRETATION OF DEEP NEURAL NETWORKS USING IDENTITY INITIALIZATION

Poster.pdf

8 Views

- Read more about Task-aware neural architecture search

The design of handcrafted neural networks requires a lot of time and resources. Recent techniques in Neural Architecture Search (NAS) have proven to be competitive with or better than traditional handcrafted design, although they require domain knowledge and have generally used limited search spaces. In this paper, we propose a novel framework for neural architecture search that uses a dictionary of models of base tasks and the similarity between the target task and the atoms of the dictionary, thereby generating an adaptive search space based on the base models of the dictionary.

icassp_poster.pdf

12 Views