- Read more about Class Specific Interpretability in CNN Using Causal Analysis

A major obstacle to the wide applicability of machine learning (ML) models is their lack of generalizability and interpretability. The ML community is increasingly working on bridging this gap. Prominent among these efforts are methods that study the causal significance of features, with techniques such as Average Causal Effect (ACE). In this paper, our objective is to use the causal analysis framework to measure the significance of features in a binary classification task.
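
For orientation, one common way to write the average causal effect of a binary feature on an output is shown below. This is a general textbook form given as an assumption; the abstract does not state the exact formulation used in the paper, and the symbols $x_i$ (feature) and $y$ (classifier output) are introduced here only for illustration.

$$
\mathrm{ACE}_{x_i \to y} = \mathbb{E}\left[\, y \mid do(x_i = 1) \,\right] - \mathbb{E}\left[\, y \mid do(x_i = 0) \,\right]
$$

A value near zero would suggest that intervening on $x_i$ has little effect on $y$ under this definition.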

29 Views

- Read more about Reinforced Curriculum Learning for Autonomous Driving in CARLA

Autonomous vehicles promise to transport people in a safer, more accessible, and even more efficient way. Today, real-world autonomous vehicles are built by large teams at big companies with a tremendous amount of engineering effort. Deep reinforcement learning can be used instead, without domain experts, to learn end-to-end driving policies. Here, we combine curriculum learning with deep reinforcement learning to learn, without any prior domain knowledge, a competitive end-to-end driving policy for the CARLA autonomous driving simulator.

62 Views

In many of the existing alpha matting implementations, an intermediate representation called a trimap needs to be created manually. To automate the process, we propose a generic neural network for trimap generation based on saliency map detection. Our model multi-modally learns a saliency map and a trimap simultaneously. Because of this structure, the network focuses on reducing the error of the trimap especially within the areas with high salience.

37 Views

- Read more about FILTER PRUNING VIA SOFTMAX ATTENTION

19 Views

- Read more about Convex Neural Autoregressive Models: Towards Tractable, Expressive, and Theoretically-Backed Models for Sequential Forecasting and Prediction

Three features are crucial for sequential forecasting and generation models: tractability, expressiveness, and theoretical backing. While neural autoregressive models are relatively tractable and offer powerful predictive and generative capabilities, they often have complex optimization landscapes, and their theoretical properties are not well understood. To address these issues, we present convex formulations of autoregressive models with one hidden layer.

168 Views

- Read more about A Consensual Collaborative Learning Method for Remote Sensing Image Classification under Noisy Multi-Labels

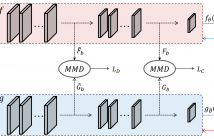

Collecting a large number of reliable training images annotated with multiple land-cover class labels for multi-label classification is time-consuming and costly in remote sensing (RS). To address this problem, publicly available thematic products are often used to annotate RS images at zero labeling cost. However, such an approach may produce a training set with noisy multi-labels, distorting the learning process. To mitigate this, we propose a Consensual Collaborative Multi-Label Learning (CCML) method.

19 Views

- Read more about An Off-Road Terrain Dataset Including Images Labeled With Measures of Terrain Roughness (Presentation Slides)

31 Views

We summarise previous work showing that the basic sigmoid activation function arises as an instance of Bayes’s theorem, and that recurrence follows from the prior. We derive a layer-wise recurrence without the assumptions of previous work, and show that it leads to a standard recurrence with modest modifications to reflect the use of log-probabilities. The resulting architecture closely resembles the Li-GRU, which is the current state of the art for ASR. Although the contribution is mainly theoretical, we show that it is able to outperform the state of the art on the TIMIT and AMI datasets.

23 Views

- Read more about (Poster) Unified Gradient Reweighting for Model Biasing with Applications to Source Separation

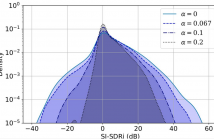

Recent deep learning approaches have shown great improvement in audio source separation tasks. However, the vast majority of such work is focused on improving average separation performance, often neglecting to examine or control the distribution of the results. In this paper, we propose a simple, unified gradient reweighting scheme, with a lightweight modification to bias the learning process of a model and steer it towards a certain distribution of results. More specifically, we reweight the gradient updates of each batch, using a user-specified probability distribution.

22 Views

- Read more about Continuous CNN for Nonuniform Time Series

CNNs for time series data implicitly assume that the data are uniformly sampled, whereas many event-based and multi-modal data are nonuniform or have heterogeneous sampling rates. Directly applying a regular CNN to nonuniform time series is ungrounded, because it cannot recognize and extract common patterns from nonuniform input signals. In this paper, we propose the Continuous CNN, which estimates the inherent continuous inputs by interpolation and performs continuous convolution on the continuous input.
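
The interpolate-then-convolve idea can be illustrated with a minimal sketch. It is not the paper's method: linear interpolation via `np.interp`, a kernel discretized into a few taps, and the names `continuous_conv`, `tap_offsets`, and `tap_weights` are all assumptions made here for illustration.

```python
import numpy as np

def continuous_conv(t_obs, x_obs, t_out, tap_offsets, tap_weights):
    """Interpolate a nonuniformly sampled series at kernel tap times,
    then take a weighted sum per output time (hypothetical helper)."""
    t_obs = np.asarray(t_obs, dtype=float)      # increasing sample times
    x_obs = np.asarray(x_obs, dtype=float)      # observed values at t_obs
    t_out = np.asarray(t_out, dtype=float)      # times where output is wanted
    tap_offsets = np.asarray(tap_offsets, dtype=float)
    tap_weights = np.asarray(tap_weights, dtype=float)

    # One row per output time, one column per kernel tap.
    query = t_out[:, None] + tap_offsets[None, :]
    # Assumption: linear interpolation stands in for the learned estimate
    # of the continuous input. np.interp clamps outside the observed range.
    x_query = np.interp(query.ravel(), t_obs, x_obs).reshape(query.shape)
    # Weighted sum over taps approximates the convolution at each output time.
    return x_query @ tap_weights

# Nonuniformly sampled observations of the signal x(t) = 2t.
t_obs = [0.0, 0.3, 1.1, 2.0, 3.7, 5.0]
x_obs = [2.0 * t for t in t_obs]
out = continuous_conv(t_obs, x_obs, [1.5, 3.0], [-1.0, 0.0, 1.0], [1/3, 1/3, 1/3])
print(out)
```

Because the taps are placed in continuous time rather than at sample indices, the same kernel can be applied regardless of how irregular the sampling is; only the interpolation step sees the raw timestamps.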

30 Views
