- Transducers

- Spatial and Multichannel Audio

- Source Separation and Signal Enhancement

- Room Acoustics and Acoustic System Modeling

- Network Audio

- Audio for Multimedia

- Audio Processing Systems

- Audio Coding

- Audio Analysis and Synthesis

- Active Noise Control

- Auditory Modeling and Hearing Aids

- Bioacoustics and Medical Acoustics

- Music Signal Processing

- Loudspeaker and Microphone Array Signal Processing

- Echo Cancellation

- Content-Based Audio Processing

- Read more about VOICEFLOW: EFFICIENT TEXT-TO-SPEECH WITH RECTIFIED FLOW MATCHING

- Log in to post comments

Although diffusion models in text-to-speech have become a popular choice due to their strong generative ability, the intrinsic complexity of sampling from diffusion models harms their efficiency. Alternatively, we propose VoiceFlow, an acoustic model that utilizes a rectified flow matching algorithm to achieve high synthesis quality with a limited number of sampling steps. VoiceFlow formulates the process of generating mel-spectrograms into an ordinary differential equation conditional on text inputs, whose vector field is then estimated.

slides.pptx

- Categories:

8 Views

8 Views

- Read more about GTCRN: A Speech Enhancement Model Requiring Ultralow Computational Resources

- Log in to post comments

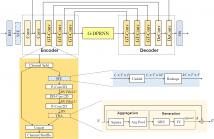

While modern deep learning-based models have significantly outperformed traditional methods in the area of speech enhancement, they often necessitate a lot of parameters and extensive computational power, making them impractical to be deployed on edge devices in real-world applications. In this paper, we introduce Grouped Temporal Convolutional Recurrent Network (GTCRN), which incorporates grouped strategies to efficiently simplify a competitive model, DPCRN. Additionally, it leverages subband feature extraction modules and temporal recurrent attention modules to enhance its performance.

GTCRN_poster.pdf

- Categories:

307 Views

307 Views

- Read more about Adversarial Continual Learning to Transfer Self-Supervised Speech Representations for Voice Pathology Detection

- Log in to post comments

In recent years, voice pathology detection (VPD) has received considerable attention because of the increasing risk of voice problems. Several methods, such as support vector machine and convolutional neural network-based models, achieve good VPD performance. To further improve the performance, we use a self-supervised pretrained model as feature representation instead of explicit speech features. When the pretrained model is fine-tuned for VPD, an overfitting problem occurs due to a domain shift from conversation speech to the VPD task.

- Categories:

27 Views

27 Views

- Read more about DATA DRIVEN GRAPHEME-TO-PHONEME REPRESENTATIONS FOR A LEXICON-FREE TEXT-TO-SPEECH

- Log in to post comments

Grapheme-to-Phoneme (G2P) is an essential first step in any modern, high-quality Text-to-Speech (TTS) system. Most of the current G2P systems rely on carefully hand-crafted lexicons developed by experts. This poses a two-fold problem. Firstly, the lexicons are generated using a fixed phoneme set, usually, ARPABET or IPA, which might not be the most optimal way to represent phonemes for all languages. Secondly, the man-hours required to produce such an expert lexicon are very high.

- Categories:

75 Views

75 Views

- Read more about DATA DRIVEN GRAPHEME-TO-PHONEME REPRESENTATIONS FOR A LEXICON-FREE TEXT-TO-SPEECH

- Log in to post comments

Grapheme-to-Phoneme (G2P) is an essential first step in any modern, high-quality Text-to-Speech (TTS) system. Most of the current G2P systems rely on carefully hand-crafted lexicons developed by experts. This poses a two-fold problem. Firstly, the lexicons are generated using a fixed phoneme set, usually, ARPABET or IPA, which might not be the most optimal way to represent phonemes for all languages. Secondly, the man-hours required to produce such an expert lexicon are very high.

- Categories:

36 Views

36 Views

- Read more about Small-Footprint Convolutional Neural Network with reduced feature map for Voice Activity Detection

- Log in to post comments

By using Voice Activity Detection (VAD) as a preprocessing step, hardware-efficient implementations are possible for speech applications that need to run continuously in severely resource-constrained environments. For this purpose, we propose TinyVAD, which is a new convolutional neural network(CNN) model that executes extremely efficiently with a small memory footprint. TinyVAD uses an input pixel matrix partitioning method, termed patchify, to downscale the resolution of the input spectrogram.

- Categories:

139 Views

139 Views

- Read more about A Flexible Framework for Expectation Maximization-Based MIMO System Identification for Time-Variant Linear Acoustic Systems

- Log in to post comments

Quasi-continuous system identification of time-variant linear acoustic systems can be applied in various audio signal processing applications when numerous acoustic transfer functions must be measured. A prominent application is measuring head-related transfer functions. We treat the underlying multiple-input-multiple-output (MIMO) system identification problem in a state-space model as a joint estimation problem for states, representing impulse responses, and state-space model parameters using the expectation maximization (EM) algorithm.

- Categories:

30 Views

30 Views

- Read more about ZERO SHOT AUDIO TO AUDIO EMOTION TRANSFER WITH SPEAKER DISENTANGLEMENT

- Log in to post comments

The problem of audio-to-audio (A2A) style transfer involves replacing the style features of the source audio with those from the target audio while preserving the content related attributes of the source audio. In this paper, we propose an efficient approach, termed as Zero-shot Emotion Style Transfer (ZEST), that allows the transfer of emotional content present in the given source audio with the one embedded in the target audio while retaining the speaker and speech content from the source.

2401.04511.pdf

- Categories:

122 Views

122 Views

- Read more about Nkululeko

- Log in to post comments

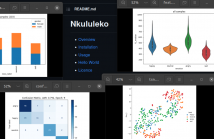

We would like to present Nkululeko, a template based system that lets users perform machine learning experiments in the speaker characteristics domain. It is mainly targeted on users not being familiar with machine learning, or computer programming at all, to being used as a teaching tool or a simple entry level tool to the field of artificial intelligence.

- Categories:

25 Views

25 Views

- Read more about Masking speech contents by random splicing: Is emotional expression preserved?

- Log in to post comments

We discuss the influence of random splicing on the perception of emotional expression in speech signals.

Random splicing is the randomized reconstruction of short audio snippets with the aim to obfuscate the speech contents.

A part of the German parliament recordings has been random spliced and both versions -- the original and the scrambled ones -- manually labeled with respect to the arousal, valence and dominance dimensions.

Additionally, we run a state-of-the-art transformer-based pre-trained emotional model on the data.

- Categories:

47 Views

47 Views